Archive for the 'Yellowstone' Category

Yellowstone Tester Update

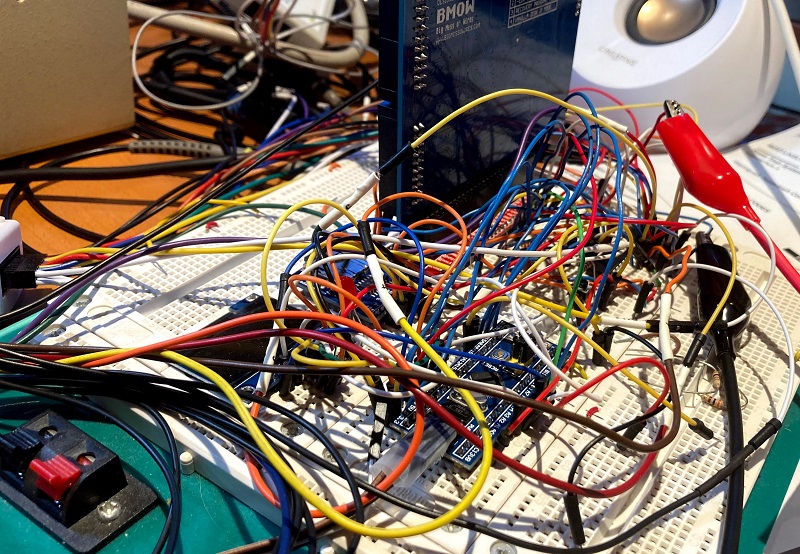

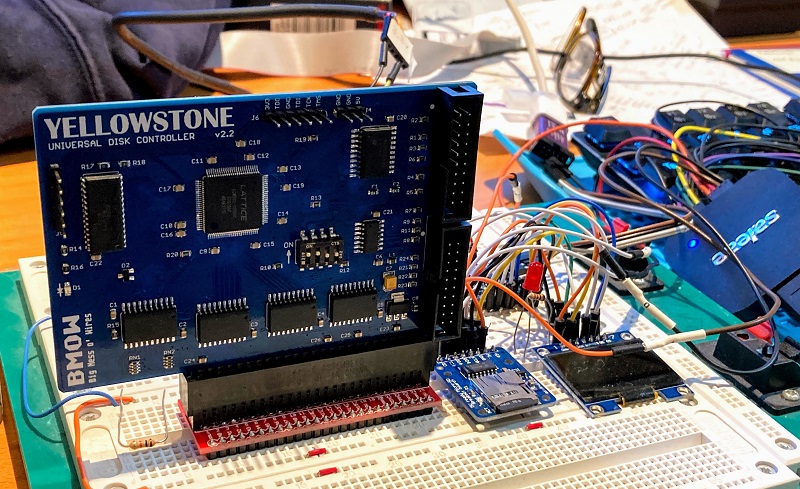

For the past couple of weeks I’ve been developing an automated tester for Yellowstone. My goal is to streamline the program and test process for Yellowstone beta cards, and eventually for the first production run. The ugly tangle of wires shown here may not look like much, but I’ve used it to successfully program a card with a blank FPGA, and to simulate 6502 bus cycles for reading and writing from the card’s memory. I was surprised to discover that those looping crisscrossed wires still work reliably even at speeds as high as 10 MHz. I was also able to measure the combined idle current consumption of a Yellowstone card and the test hardware itself: about 80 mA. That’s lower than I’d guessed.

I think I’ve stretched this breadboard prototype about as far as it will go, even though many things remain incomplete. There’s still no testing of the disk drive interfaces, nor have I implemented any of the short-circuit detection that I discussed here previously. Those pieces will have to wait for the final version of the tester, when it’s built into a nice PCB instead of a snarl of loose wires. My next task is to convert what I’ve built on the breadboard into a PCB, and add the missing elements for testing drive interfaces and short circuits. There’s still a lot of software to write as well. I’m guessing the whole process may take about four weeks, including the PCB manufacturing time.

Once that’s done, I can hand-assemble a few Yellowstone beta cards and use the finished tester to program and verify them. If all goes well, I may be able to wrap up Yellowstone development by Halloween.

Short Circuit Current Experiments

A couple of weeks ago I shared some thoughts about an automated tester for Yellowstone boards, including how to test for short circuits. One of the questions that grew out of the resulting discussion was whether short circuits could only be detected by functional test failures (test results don’t match expected results) or if they could also be directly detected by measuring the supply current. A second question was how large the short circuit currents would typically be. I’ve recently done some tests to help answer these questions.

There are several different kinds of shorts that we need to think about:

- shorts between power supplies, where short circuit current flows whenever the power is on

- shorts between a signal and a power supply, where short circuit current only flows for certain signal values

- shorts between two signals, where short circuit current only flows for certain pairs of signal values

- shorts where the current source comes from the device being tested

- shorts where the current source comes from the equipment that’s connected to the device

Most of Yellowstone’s external IOs pass through 74LVC245 buffers. All of the tester’s IOs will pass through MCP23S17 port expanders. So I intentionally short-circuited some samples of both chips, alone and then together, to see what would happen. Here’s what I found.

- 74LVC245 with a 3.3V supply, and one logical high output short-circuited to ground: 70 mA short-circuit current

- 74LVC245 with one logical low output short-circuited to 3.3V: 110 mA

- MCP23S17 with a 5V supply, and one logical high output short-circuited to ground: 30 mA short-circuit current

- MCP23S17 with one logical low output short-circuited to 5V: 50 mA

- 74LVC245 logical high output short-circuited to MCP23S17 logical low output: 50 mA

- 74LVC245 logical low output short-circuited to MCP23S17 logical high output: 30 mA

These currents are all large enough that it should be possible to reliably measure and detect them as deviations from the normal supply current (expected to be something in the range of 100 to 200 mA). So is this a good approach? Will it work? I’m going to try it and see, and if it’s successful then the tester will be that much more useful. If it’s not successful, I won’t have lost anything but some time and a few dollars worth of parts.
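As a rough illustration of the detection idea, here’s a minimal Python sketch that compares a measured supply current against a known-good baseline. The 150 mA baseline and the 15 mA threshold are assumptions picked for the example (the threshold is half the smallest short-circuit current in the list above), and `looks_like_short` is a hypothetical helper, not actual tester code. It also assumes the short-circuit current actually shows up in the supply being measured.

```python
# Short-circuit currents measured in the experiments above, in mA.
MEASURED_SHORT_CURRENTS_MA = {
    "74LVC245 high output to GND": 70,
    "74LVC245 low output to 3.3V": 110,
    "MCP23S17 high output to GND": 30,
    "MCP23S17 low output to 5V": 50,
    "74LVC245 high to MCP23S17 low": 50,
    "74LVC245 low to MCP23S17 high": 30,
}

# Assumed values, for illustration only.
BASELINE_MA = 150.0   # hypothetical known-good supply current (in the 100-200 mA range)
THRESHOLD_MA = 15.0   # hypothetical margin: half the smallest measured short current


def looks_like_short(measured_ma, baseline_ma=BASELINE_MA, threshold_ma=THRESHOLD_MA):
    """Return True if the supply current exceeds the baseline by more than the threshold."""
    return (measured_ma - baseline_ma) > threshold_ma


if __name__ == "__main__":
    for name, extra_ma in MEASURED_SHORT_CURRENTS_MA.items():
        flagged = looks_like_short(BASELINE_MA + extra_ma)
        print(f"{name}: +{extra_ma} mA, flagged={flagged}")
```

In practice the baseline would probably need to be measured per test step, since the normal supply current changes with the state of the IOs.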

Why do this at all? Isn’t functional testing enough, with the assumption that any short circuit will cause a test failure somewhere? I say… maybe. In a perfect world, any short circuit would result in a functional test failure, but I don’t live in a perfect world. If I can do something to help detect shorts that functional tests missed, or that don’t result in test failures 100 percent of the time, I think that’s a good thing.

For how long do I need to measure the current? If the tester changes some IO values, waits a microsecond, and then measures the new supply current, is that enough? Or will capacitors on the voltage supplies smooth out the current from short circuits, so that microsecond-level measurements aren’t useful and I need to wait milliseconds or longer to get useful data? I’ll just have to try it and see.
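One way to answer the timing question empirically is to sample the current repeatedly after changing the IOs, and count how many samples it takes before the readings stop moving. The sketch below shows that idea in Python. `read_current_ma` is a hypothetical callable standing in for whatever current sensor the tester ends up using, and the tolerance and sample counts are arbitrary assumptions.

```python
def settled_current(read_current_ma, tolerance_ma=1.0, stable_count=3, max_samples=1000):
    """Sample until `stable_count` consecutive readings agree within `tolerance_ma`.

    Returns (settled_value, samples_taken), or (None, max_samples) if the
    readings never settle within the sample budget.
    """
    previous = read_current_ma()
    run = 1  # length of the current run of mutually close readings
    for n in range(2, max_samples + 1):
        current = read_current_ma()
        if abs(current - previous) <= tolerance_ma:
            run += 1
            if run >= stable_count:
                return current, n
        else:
            run = 1
        previous = current
    return None, max_samples


if __name__ == "__main__":
    # Fake readings that ramp up the way a capacitor-smoothed supply might.
    fake = iter([100.0, 120.0, 135.0, 148.0, 149.5, 150.0, 150.0, 150.0])
    print(settled_current(lambda: next(fake)))
```

Multiplying the sample count by the sampling interval gives a rough settling time, which would show whether microsecond-level measurements are meaningful or whether the tester has to wait longer.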

If I measure the current, which current should I be measuring? I’m not quite sure. It might seem that I should measure the 5V supply current for the Yellowstone board (the device being tested). That would catch problems where there’s a short circuit between two elements on the Yellowstone board, whether they’re signals or supplies. It would also catch problems where a Yellowstone input signal was shorted to 3.3V or 5V, creating short-circuit currents whenever the connected equipment tries to drive a logical low to the input. But it wouldn’t catch problems where a Yellowstone input was shorted to ground.

In a case like that, short circuit current would flow whenever the connected equipment tries to drive a logical high value to the input. But since the current would be coming from the connected equipment, and not the Yellowstone board, measuring the Yellowstone supply current wouldn’t help. Maybe this is a rare enough case that I shouldn’t worry about measuring these currents, and I can just assume any such problems will be caught by a functional test.

Yellowstone: Ready for Takeoff

After several days of extensive testing with the latest Yellowstone prototype, and several years of development work on this universal Apple II disk controller concept, I’m here to say that Yellowstone v2.2 is looking good. Looking very good. It’s the bee’s knees, the cat’s pajamas, the whole package; it’s a humdinger, a totally gnarly wave; it’s crackerjack, boffo, hella good, chezzar, d’shiznit, on point, and just plain bombdiggity. I’ve thrown a dumpster-load of assorted drives and disks at it, and it’s all working nicely. Finally everything has fallen into place, and I’m excited for what comes next. Here are some feature highlights:

- Supports most Apple II and Macintosh disk drives, including 3.5 inch floppy, 5.25 inch floppy, Unidisk 3.5, and Smartport hard drives

- Compatible with Apple II+, Apple IIe, and Apple IIgs

- Maximum of 2 standard disk drives, or up to 5 drives when mixing smart and standard drives

- 20-pin ribbon cable or 19-pin D-SUB connectors

- Works in any slot

- Disk II compatibility mode for tricky copy-protected disks

- User-upgradeable for future feature enhancements

Yellowstone supports basically every disk drive ever made for the Apple II or Macintosh, whether external or internal. The only exception is the single-sided 400K 3.5 inch drive used in the original 1984 Macintosh. The list of drives includes:

- Standard 3.5 inch: Apple 3.5 Drive A9M0106, Mac 800K External M0131, Apple SuperDrive / FDHD Drive G7287 (as 800K drive), internal red-label and black-label 800K drives liberated from old Macs, internal auto-inject or manual-inject Superdrives (as 800K drive), and probably also third-party 3.5 inch drives from Laser, Chinon, AMR, Applied Engineering, and others.

- Standard 5.25 inch: Disk II A2M0003, Unidisk 5.25 A9M0104, AppleDisk 5.25 A9M0107, Disk IIc A2M4050, Duo Disk A9M0108

- Smart drives: BMOW Floppy Emu Smartport Hard Disk emulation mode, Unidisk 3.5 A2M2053

- Drive emulators: BMOW Floppy Emu, wDrive, etc.

With these latest test results, we’re almost at the point where other people can start to get their hands on Yellowstone cards. There are surely still some minor bugs, quirks, and annoyances yet to be discovered, and maybe some larger problems too. I need to get a few Yellowstone prototypes to beta testers, so they can try the cards with their equipment, and help find any remaining issues.

My priority now is to finish the automated tester that I’ve been developing. As I hand-assemble a few more prototype boards, I’ll test them in the automated tester, proving both the tester and the board at the same time. That means delivery of boards to beta testers will proceed more slowly than if I weren’t developing an automated tester, but I think this is necessary so that I can eventually scale up manufacturing for a general public release. I’m guessing there may be four to six weeks needed to finish off the automated tester and build it, but hopefully I can squeeze out at least a couple of hand-verified prototype boards much sooner than that.

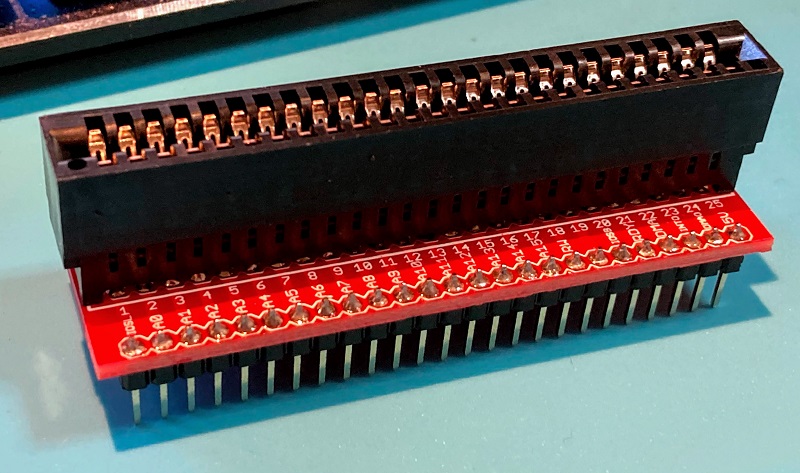

The last piece of this puzzle will be securing a large enough supply of DB-19 female connectors, and designing a detachable Yellowstone adapter for them. I have a couple of DB-19F adapters that I’ve been using for testing, but DB-19F connectors are rare and hard to find. The ones I have now use a DB-19F with solder cups, but the type with PCB pins seems to be more common, if you can find them at all. I’ve stashed a modest supply of DB-19F connectors that’s enough for an initial Yellowstone production run, but the outlook is uncertain beyond that. I may have to commission 10000 new ones like I did with the DB-19 male.

These are exciting times! Thanks for following along this journey with me.

Yellowstone 2.2 First Look

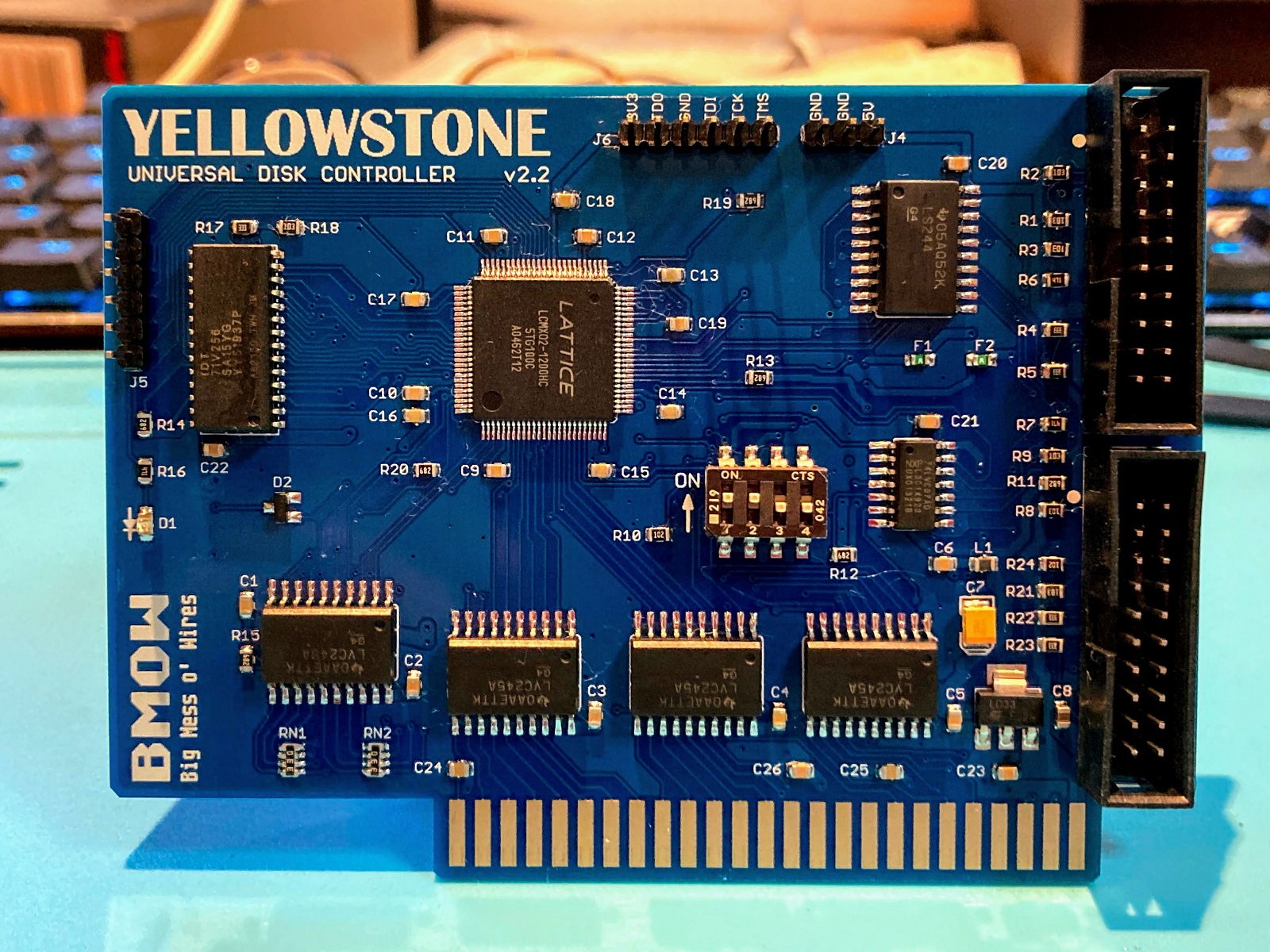

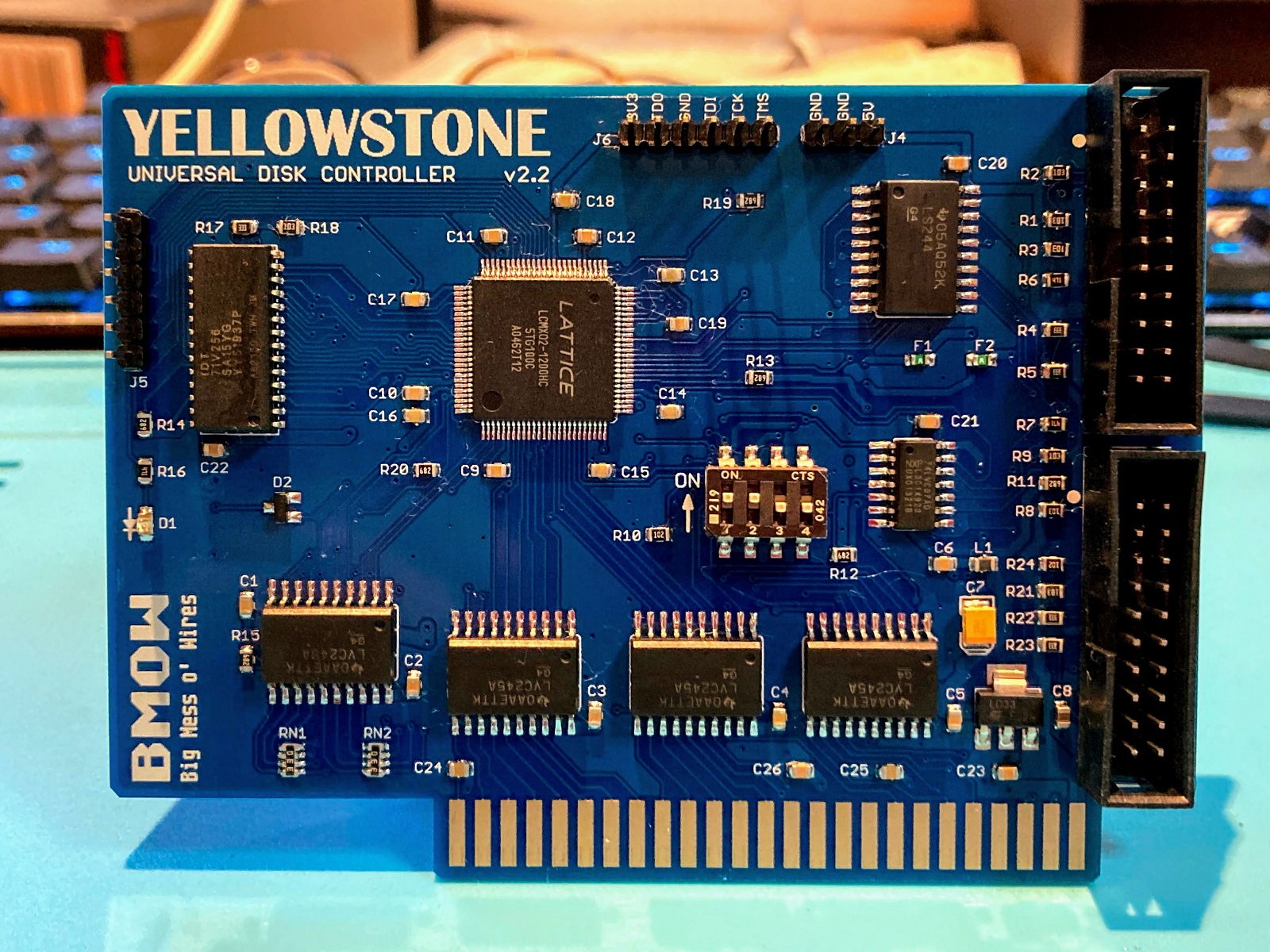

Yellowstone version 2.2 is up and running! If you don’t remember what’s changed in v2.2, well… that makes two of us. Luckily we can both go read this August 20 post for a reminder. Hand assembly took about two hours, and this was the first time any of the Yellowstone prototypes has worked straight away without needing any troubleshooting or fixes. The board has only been through one superficial test so far, confirming the ROM contents and booting a 5.25 inch disk, but I’m feeling optimistic it’s a winner.

After going through board v1.0, v2.0, v2.1, and v2.2, I’m sincerely hoping that this will be the final hardware version. The return of the DIP switch is the most visible change here – now the much-discussed -12V supply can be manually enabled or disabled. Leaving it enabled will almost always be OK, but there are a couple of polyfuses to protect the hardware just in case. There’s also a fix in v2.2 for a minor problem that prevented an Apple 3.5 Drive’s eject button from working if the drive was plugged into Yellowstone’s connector #2.

There are still some software issues to clean up, but (crossing my fingers) I think I can see the finish line now.

Just for fun, here’s a shot of the new Yellowstone board along with all of the disk drives I’ve amassed for testing. All of these will work with Yellowstone, and many others too.

And a bonus feature: here’s a peek at a 50 pin Apple peripheral card breakout board that I whipped up. It has all the signals labeled, because I don’t trust myself while debugging. With this breakout board, it’s possible to run a peripheral card on a breadboard instead of inside the Apple II. That’s exactly what I’m doing with the work-in-progress version of the Yellowstone tester shown here.

Testing For Short Circuits

I’m still working on the design for an automated Yellowstone tester. The general idea is to use an STM32 microcontroller that’s connected to all the Yellowstone board’s I/O signals, then drive the inputs in various combinations and verify the outputs. This should catch a lot of potential problems, including open circuits, missing components, and many instances of defective components. But what about short circuits on the Yellowstone board that’s being tested? These aren’t so simple to detect, and they can potentially damage the tester.
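The drive-and-verify idea can be sketched in a few lines. This is only an illustration in Python, not the STM32 firmware: `run_functional_test`, `FakeBoard`, and the walking-ones vectors are all hypothetical names and choices made up for the example, and the fake board simply echoes its inputs so that the loop has something to check.

```python
def run_functional_test(apply_inputs, read_outputs, vectors):
    """Drive each input pattern and collect (inputs, expected, actual) for mismatches."""
    failures = []
    for inputs, expected in vectors:
        apply_inputs(inputs)
        actual = read_outputs()
        if actual != expected:
            failures.append((inputs, expected, actual))
    return failures


class FakeBoard:
    """Stand-in device: echoes an 8-bit input, optionally with output bits stuck low."""

    def __init__(self, stuck_low_mask=0x00):
        self.stuck_low_mask = stuck_low_mask
        self.value = 0

    def apply_inputs(self, value):
        self.value = value & 0xFF

    def read_outputs(self):
        return self.value & ~self.stuck_low_mask & 0xFF


# Walking-ones pattern: each vector exercises exactly one bit.
WALKING_ONES = [(1 << bit, 1 << bit) for bit in range(8)]

if __name__ == "__main__":
    board = FakeBoard(stuck_low_mask=0x04)  # simulate an open circuit on bit 2
    for inputs, expected, actual in run_functional_test(
        board.apply_inputs, board.read_outputs, WALKING_ONES
    ):
        print(f"in={inputs:#04x} expected={expected:#04x} actual={actual:#04x}")
```

A stuck or open signal shows up as a mismatch on the vector that exercises it, which is exactly the kind of fault this style of test catches well. Short circuits are a different story.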

The trouble with short circuits is that they can’t really be tested by working with digital signals alone – they force the whole problem into the analog domain. Depending on what’s shorted, and the effective resistance of the short, the behavior can vary greatly. A direct +5V to ground short might blow a fuse, or prevent the tester from even initializing if it shares a common supply. Other shorts might occur between a signal and any supply voltage (+5, -5, +12, -12), or between two supply voltages, or between two signals. The shorted signals might be driven from the tester, or from a chip on the Yellowstone board, or one of each. A short might cause immediate failure somewhere, or it might just cause a signal’s voltage to get pulled down or up. Depending on the change in voltage, it might not be enough to flip a bit and create a detectable error. Lots of components can also likely survive a short circuit for a while, but they’ll get hot, and will probably fail later. How can the tester detect these?
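To make the “might not flip a bit” point concrete, here’s a simplified model that treats the driver and the short as a resistive voltage divider. Every number in it is an assumption for illustration: a 3.3V output, a 47 ohm effective driver resistance, and a 2.0V input-high threshold. Real output stages aren’t linear resistors, so treat this as a back-of-the-envelope sketch only.

```python
# Assumed values, for illustration only.
VCC = 3.3          # volts, output driven to logical high
R_DRIVER = 47.0    # ohms, hypothetical effective output resistance of the driver
V_IH = 2.0         # volts, hypothetical minimum voltage guaranteed to read as high


def shorted_node_voltage(vcc, r_driver_ohms, r_short_ohms):
    """Voltage at a node driven high through r_driver, with a resistive short to ground."""
    return vcc * r_short_ohms / (r_driver_ohms + r_short_ohms)


def still_reads_high(voltage, v_ih=V_IH):
    """True if the receiving input is still guaranteed to see a logical high."""
    return voltage >= v_ih


if __name__ == "__main__":
    for r_short in (1000.0, 100.0, 47.0, 10.0):
        v = shorted_node_voltage(VCC, R_DRIVER, r_short)
        print(f"short={r_short:7.1f} ohm -> {v:.2f} V, reads high: {still_reads_high(v)}")
```

Under these assumptions a 1K short to ground leaves the signal comfortably high, so a purely digital test would pass even though the board is defective.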

Assuming there’s a fuse, what type should it be? A replaceable fuse will be annoying if boards with shorts are at all common, since the fuse will need replacement often while testing a batch of boards. A poly fuse is resettable, but it can take about an hour to cool down enough to reset, rendering the tester unusable during that time. Neither option seems great.

If there’s a fuse, what should be fuse-protected? A fuse on the board’s 5V supply won’t do anything if there’s a short between an input signal and GND, because the current will be sourced by the tester and not by the Yellowstone board. Nor will it do anything for shorts on the other supply voltages. A short between signal and supply or between two signals may or may not be protected by a fuse, depending on where the current is ultimately sourced.

What max current rating is appropriate for a fuse? The “safe” current level could vary widely depending on what supply or signal is considered, and the current state of all the I/O signals. Then there’s the 3.3V regulator on the Yellowstone board, which has its own overcurrent protection mechanism. If there’s a short between 3.3V signals, the regulator will limit the current, and a fuse or other mechanism on the tester may not even realize that a short circuit occurred.

My head is spinning just trying to map out the possible short circuit cases, and how to detect and prevent them. At the same time, I’m trying to design a tester that strikes a good compromise between complexity and thoroughness. Maybe I could design a tester with a dozen different fuses and current-monitoring ICs and IR thermal sensors, and it would be great at detecting short circuits, but it would be too much complexity and effort for a small-scale hobbyist product. My goals are to avoid damaging the tester, and to detect a large majority of common board failures including short circuits, while recognizing that it will never be 100 percent perfect.

Smartport Cleanup

The Yellowstone Smartport problems that I discussed last week should all be resolved now. Small fixes to big problems. As usual the main challenge was determining exactly what the problem was and how to reproduce it. After that, the fix hopefully becomes clear. For those keeping score at home, here’s a recap:

3.5 Inch Disk Activity Caused Accidental Smartport Resets and Enables – This was the original topic of last week’s post. I’d somehow failed to consider what would happen if a 3.5 inch drive and an intelligent Smartport drive were attached on parallel drive connectors with shared I/O signals. Due to the complexities of Apple’s scheme for enabling different types of drives, with this drive setup it’s possible for normal 3.5 inch disk activity to also accidentally trigger a Smartport reset or to enable a Smartport drive. It turned out that Yellowstone was almost working despite this, which is why I hadn’t noticed any problems in my earlier tests. But once I knew what to look for, I was able to see evidence of problems and accidental resets during particular patterns of disk access.

The best solution to this problem would probably be eliminating parallel drive connectors with shared I/O signals, and using a single serial daisy-chain of drives instead, like Apple does. But that comes with its own set of limitations, and at this point Yellowstone is pretty well married to the parallel connectors idea.

My solution was to review the Yellowstone firmware, looking for specific places where 3.5 inch drive activity might cause accidental Smartport resets or enables. In theory there could be many places where it happens, but thanks to some very lucky coincidences, it’s actually quite rare. I only found one instance where an accidental reset would occur, and I was able to eliminate it by modifying the way Yellowstone accesses the internal registers of 3.5 inch drives. I wasn’t able to eliminate some places where accidental enables would occur, but after carefully reviewing everything, I concluded they were harmless.

Smartport Writes Caused Strange Behavior or Crashes – This took a very long time to isolate, because it displayed all sorts of odd behavior that was slightly different under GS/OS, ProDOS, and BASIC. Eventually I discovered that saving a BASIC file to a Smartport disk would outright crash 100 percent of the time, which made it easier to track down the issue. Thanks to revision control, I was able to dig back through weeks of prior changes and find one change from mid-July that subtly broke Smartport writes.

Yellowstone has a 32K SRAM, of which only 2K is used, and back in July I made some small changes to enable access to the remaining 30K for debugging purposes. While doing that, I confused two variables with similar names, with the surprising result that one byte of RAM would consistently be read incorrectly. This byte was normally only used for Smartport write buffering. Depending on what data was written to the Smartport disk, this didn’t always cause immediate and obvious problems. Instead it could cause subtle data corruption.

Unexplained Cycles of Repeatedly Resetting and Reinitializing the Smartport – Even after eliminating accidental Smartport resets caused by normal 3.5 inch disk activity, another similar problem remained. Very often I would see cycles where the Smartport was reset and reinitialized 10+ times, when using a Smartport drive in a mixed system along with other drive types. Unlike the original problem that began last week’s investigations, the logic analyzer showed these were genuine Smartport resets and not some accidental signal glitch.

The difficulty with this problem was finding a concise and coherent description of it. At first I thought it was a GS/OS problem, since I couldn’t initially reproduce it under ProDOS. Then I thought maybe it actually was a signal glitch, but of a different kind than I’d seen earlier. I had a theory it was related to earlier changes I’d made to the status handler for 3.5 inch disks. All of this was wrong.

After lots more testing, I was able to restate the problem description as this: after any 3.5 inch read or write operation, a Smartport reset and reinitialize would occur before the next Smartport communication. It didn’t seem to be something that was happening because of an error, but rather it looked like some state information was causing it to intentionally perform a reset and reinitialize.

Digging through the UDC ROM on which Yellowstone is based, I eventually found some code doing exactly that. If the most recently accessed drive was not a Smartport drive, then it would reset and reinitialize all the Smartport drives before the next Smartport communication. I don’t know why, but I’m guessing it may have been a flawed attempt to resolve the same issue I ran into with accidental Smartport resets and enables. Fixing accidental resets with intentional resets doesn’t seem like much of a fix to me. Since I already solved the accidental reset problem another way, I simply removed this bit of code, and the problem disappeared.
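Here’s a toy model of that behavior in Python, just to show why the reset cycles tracked 3.5 inch activity so closely. It is not the ROM code: the class, the drive type strings, and the flag name are all hypothetical, and it assumes the very first access doesn’t count as a “previous non-Smartport access”.

```python
class DriveController:
    """Toy model: tracks the last accessed drive type and counts Smartport resets."""

    def __init__(self, reset_after_other_drive):
        # True models the original ROM behavior, False models the code removed.
        self.reset_after_other_drive = reset_after_other_drive
        self.last_drive_type = None
        self.smartport_resets = 0

    def access(self, drive_type):
        previous_was_other = self.last_drive_type not in (None, "smartport")
        if drive_type == "smartport" and self.reset_after_other_drive and previous_was_other:
            # Original behavior: reset and reinitialize before talking to the Smartport.
            self.smartport_resets += 1
        self.last_drive_type = drive_type


# A mixed access pattern: Smartport traffic interleaved with 3.5 inch disk activity.
ACCESSES = ["smartport", "3.5", "smartport", "3.5", "3.5", "smartport"]

if __name__ == "__main__":
    for flag in (True, False):
        controller = DriveController(reset_after_other_drive=flag)
        for drive in ACCESSES:
            controller.access(drive)
        print(f"reset_after_other_drive={flag}: {controller.smartport_resets} resets")
```

With the original behavior, every switch from a 3.5 inch drive back to the Smartport costs a full reset and reinitialize, which matches the pattern I was seeing in mixed systems.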

I’m hoping this is the end of mysterious Smartport problems, at least for a while. Now, where was I?
