Reflections on Orders of Magnitude

This week I upgraded my home internet service to 10 Gbps, not because I needed faster speeds, but because it was simply cheaper than my existing 400 Mbps service. A 25x speed improvement for less money? Yes, I’ll take that, thank you! This upgrade started me thinking about how much computer technology has improved over the decades. When I was a kid, I often thought that the most interesting period of technological advancement was 50 or 100 years in the past, when a person could be born into a world of horse-powered transportation and telegraphs and retire in an era of intercontinental air travel and satellite communications. Yet the changes during my lifetime, measured as order-of-magnitude improvements, have been equally amazing.

Processor Speeds – 6000 times faster, about 4 orders of magnitude

The first computer I ever got my hands on was my elementary school’s Apple II, with a 1 MHz 6502 CPU. Compare that to Intel’s Core i9-13900KS, which boosts to 6.0 GHz without overclocking, for a nice 6000-fold improvement in clock speed.
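As a quick check on the heading's arithmetic, the short Python sketch below divides the two clock rates and takes the base-10 logarithm to count orders of magnitude. It uses only the figures given above (1 MHz and 6.0 GHz); the variable names are illustrative.

```python
import math

# Clock rates from the text, in hertz
apple_ii_hz = 1.0e6    # 1 MHz 6502 in the Apple II
core_i9_hz = 6.0e9     # 6.0 GHz peak clock of the Core i9-13900KS

ratio = core_i9_hz / apple_ii_hz
orders = math.log10(ratio)  # how many powers of ten separate the two

print(f"{ratio:,.0f}x faster, about {orders:.1f} orders of magnitude")
```

A ratio of 6000 sits at roughly 10^3.8, which rounds to the heading's "about 4 orders of magnitude".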

Raw GHz (or MHz) is a weak measure of CPU performance, of course. Compared to the 6502 CPU, that Intel i9 has much wider registers, more registers, more complex instructions, superscalar instruction execution, hyperthreading, multiple execution cores, and other fantastic goodies that the MOS designers could scarcely have dreamed of. I’m not sure if there’s any meaningful benchmark that could directly compare these two processors’ performance, but it would be an entertaining match-up.

As impressive as a 6000x improvement may be, it’s nothing compared to the improvements in other computer specs.

Main Memory – 1.4 million times larger, about 6 orders of magnitude

In 1983 my family purchased an Atari 800 home computer, with a huge-for-the-time 48 KB of RAM. What’s the modern comparison? Maybe a pre-built gaming PC with 64 GB of RAM? That’s more than a million times the RAM capacity of my old Atari system, but it’s not even very impressive by today’s standards: Windows 10 Pro theoretically supports up to 2 TB of RAM.
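The same calculation for memory reproduces the 1.4 million figure, assuming binary units (1 KB = 1024 bytes, 1 GB = 1024^3 bytes). This is a minimal sketch; the names are illustrative.

```python
import math

KB = 1024          # binary kilobyte, as home-computer RAM was specified
GB = 1024 ** 3     # binary gigabyte

atari_800_ram = 48 * KB
gaming_pc_ram = 64 * GB

ratio = gaming_pc_ram / atari_800_ram
print(f"{ratio:,.0f}x more RAM, about {math.log10(ratio):.1f} orders of magnitude")
```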

Communication Bandwidth – 33 million times faster, about 7 orders of magnitude

Who doesn’t love a 300 bps modem? It was good enough for getting that Atari system communicating with the wider world, connecting to BBS systems and downloading software. But today’s communication technologies are so much faster, it makes my head spin. What am I ever going to do with 10 Gbps internet service… stream 200 simultaneous 4K videos? Download all of Wikipedia, ten thousand times every day?

To put this in perspective, the amount of data that I can now download in one minute would have taken about 63 years with that Atari modem.
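The one-minute comparison depends on how "10 Gbps" is read. Network link rates are normally quoted in decimal units (10 × 10^9 bits per second), which gives roughly 63 years at 300 bps, while a binary reading (10 × 2^30) gives roughly 68 years. The sketch below prints both, assuming a 365.25-day year; the helper name `modem_years_for_one_minute` is hypothetical.

```python
import math

MODEM_BPS = 300                         # Atari-era 300 bps modem
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # assumes a 365.25-day year

def modem_years_for_one_minute(link_bps):
    """Years a 300 bps modem needs to move what link_bps moves in 60 seconds."""
    bits_in_one_minute = link_bps * 60
    return bits_in_one_minute / MODEM_BPS / SECONDS_PER_YEAR

for label, link_bps in [("decimal, 10 * 10**9 bps", 10 * 10**9),
                        ("binary,  10 * 2**30 bps", 10 * 2**30)]:
    speedup = link_bps / MODEM_BPS
    print(f"{label}: {speedup:,.0f}x faster "
          f"({math.log10(speedup):.1f} orders), "
          f"one minute = {modem_years_for_one_minute(link_bps):.1f} years")
```

The decimal reading is the one that matches the heading's 33 million figure.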

External Storage – 182 million times larger, about 8 orders of magnitude

Over the past few decades, the champion of computer spec improvements has undoubtedly been external storage. My Atari 1050 floppy drive supported “enhanced density” floppy disks with 130 KB of data. While that seemed capacious at the time, today a quick Amazon search will turn up standard consumer hard drives with 22 TB of storage capacity, a mind-bending improvement of 182 million times over that floppy disk.
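The storage ratio is where units matter most. The sketch below assumes 130 KB means 130 × 1024 bytes. Reading the 22 TB drive in binary terabytes reproduces the 182 million figure, while the decimal terabytes that drive makers actually advertise give roughly 165 million. Either way the result lands a little above 8 orders of magnitude.

```python
import math

KB = 1024
TB_BINARY = 1024 ** 4
TB_DECIMAL = 10 ** 12

floppy = 130 * KB                  # Atari 1050 enhanced-density disk, 130 KB
drive_binary = 22 * TB_BINARY      # 22 TB read as binary terabytes
drive_decimal = 22 * TB_DECIMAL    # 22 TB as drive makers market it

for label, drive in [("binary TB", drive_binary), ("decimal TB", drive_decimal)]:
    ratio = drive / floppy
    print(f"{label}: {ratio / 1e6:,.0f} million times larger, "
          f"{math.log10(ratio):.1f} orders of magnitude")
```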

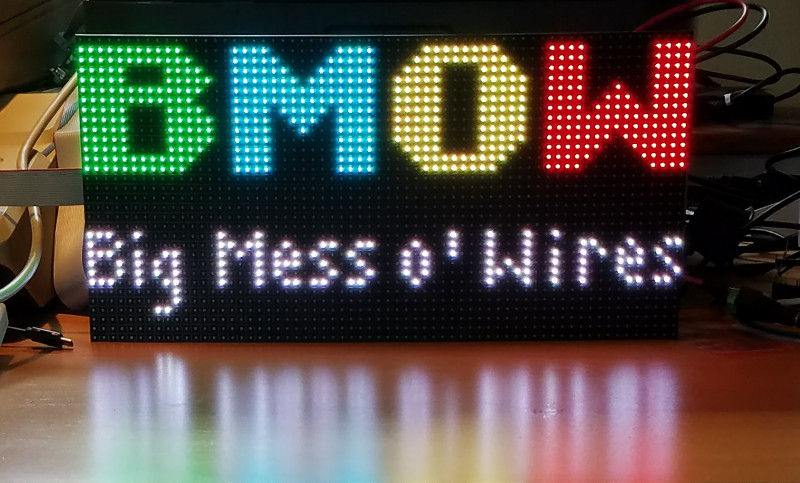

If you’re a vintage computer collector or a BMOW Floppy Emu user, then you’re probably familiar with the fact that the entire library of every Apple II or classic Mac program ever made could fit on a single SD memory card today.

Hardware and Software Cost – 0 orders of magnitude

Despite all these million-fold improvements in computer performance, hardware today still costs about the same as it did 40-some years ago when that Atari system was new. The Atari 800’s launch price in 1979 was $999.95, roughly the same price you’ll pay for a decent Mac or Windows laptop today. The Apple II launched at $1298 in 1977, and the first Macintosh cost $2495 in 1984. In real dollar terms (adjusted for inflation), the cost of computer hardware has actually fallen substantially even while it’s improved a million-fold. That first Macintosh system’s cost is equivalent to $7428 in today’s dollars.

Software prices have also barely budged since the 8-bit days. The first version of VisiCalc (1979) was priced at $100, and today Microsoft Excel is $159. As a kid I mowed lawns to earn enough money to buy $40 Atari and Nintendo games, and today a kid can buy PS5 or Nintendo Switch games for $40. Why? I can’t buy a cheeseburger or a car or a house for 1983 prices, but I can buy the latest installment of the Mario game series for the same price as the original one?

Looking Ahead

If there’s anything I’ve learned from the past, it’s that I stink at predicting the future. Nevertheless, it’s fun to extrapolate the trends of the past 40 years and imagine the computers of 2063, if I’m fortunate enough to live to see them. 40 years from now I look forward to owning a home computer sporting a 36 THz CPU, 90 petabytes of RAM, a 330 petabit/sec internet connection, and 4 zettabytes of storage space, which I’ll purchase for $1000.
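The 2063 figures come from a simple compounding assumption: each of today's specs grows by the same factor over the next 40 years as it did over the last 40. The Python sketch below applies each section heading's growth factor to the modern figure, treating all units as decimal SI units (an assumption; the helper names `with_si_prefix` and `extrapolate` are illustrative). Because the internet figure starts as 10 Gbps, its extrapolation naturally comes out in bits per second, and 22 TB times 182 million works out to about 4 zettabytes.

```python
# Today's specs from the text (decimal SI units) and each spec's 40-year
# growth factor from the section headings.
SPECS = {
    "CPU clock": (6.0e9, 6000, "Hz"),
    "RAM": (64e9, 1.4e6, "B"),
    "Internet link": (10e9, 33e6, "bit/s"),
    "Storage": (22e12, 182e6, "B"),
}

PREFIXES = ["", "k", "M", "G", "T", "P", "E", "Z", "Y"]

def with_si_prefix(value, unit):
    """Format value with the largest SI prefix that keeps it at or above 1."""
    i = 0
    while value >= 1000 and i < len(PREFIXES) - 1:
        value /= 1000
        i += 1
    return f"{value:.0f} {PREFIXES[i]}{unit}"

def extrapolate(specs):
    """Assume each spec grows by its historical factor once more."""
    return {name: with_si_prefix(now * growth, unit)
            for name, (now, growth, unit) in specs.items()}

for name, future in extrapolate(SPECS).items():
    print(f"{name} in 2063: {future}")
```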

4 Comments


A joke from my computer engineering prof, circa 1993 (not sure who originated it):

What’s the difference between hardware and software?

As time goes by, hardware gets smaller, faster, and cheaper. Software gets bigger, slower, and more expensive.

Trying to judge whether “decent” computers have gotten more or less expensive over time is difficult, because there’s really no limit to how much one can spend on a computer, nor is there any clear line as to how fancy something must be to qualify as “a decent computer”. If one wanted a minimal-cost machine that could emulate an Apple //c equipped with a Floppy Emu but with a broken floppy drive, and one had a spare keyboard and a composite monitor, the total hardware cost to hand-build something from pre-assembled components would probably be under $15.

I think the real issue with price has to do with how much people are willing to pay for “a decent computer”, with the specs of “a decent computer” representing the level of machine that’s available for that price.

As for retail software, there’s a price below which retailing software doesn’t really make sense, especially given the amount of free and open source software available. It’s not worth bothering to write a retail software program to compete with a free program unless it would offer users sufficient benefit to justify a certain minimum price.

With both hardware and software, however, there’s another trend that makes it hard to evaluate pricing: privacy. The $15 Apple //c clone I described wouldn’t spy on its users, but hardware and software that gather information about their users can be had at a steep discount compared with products that don’t. It’s hard to know what things would cost in a marketplace that wasn’t subsidized by such data gathering.

In early 2015, I read on a prime number website that the author had almost not included a download link for the first N million primes, because they could be calculated faster than they could be downloaded.

I did not believe this, so I wrote a simple C program that used the Sieve of Eratosthenes to generate the first million primes. It’s not the fastest algorithm, and it was written in a high-level language, so I had some preconceived notions about how slow it would be.

It took less than 5 seconds, including writing the results to a file. I compared the output with the downloaded list of the first million primes, and they matched.

I gained a new appreciation for the speed of then-modern processors.
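For readers curious about the approach in the comment above, here is a rough Python sketch of a Sieve of Eratosthenes that returns the first n primes. It is not the commenter's C program; the function name `first_n_primes` is hypothetical, and sizing the sieve with Rosser's upper bound on the nth prime, p_n < n(ln n + ln ln n) for n ≥ 6, is this sketch's own choice.

```python
import itertools
import math

def first_n_primes(n):
    """Return a list of the first n primes via the Sieve of Eratosthenes."""
    if n < 1:
        return []
    # Sieve size: Rosser's bound p_n < n (ln n + ln ln n) holds for n >= 6;
    # 15 is enough to cover the first five primes (2, 3, 5, 7, 11).
    if n < 6:
        limit = 15
    else:
        limit = int(n * (math.log(n) + math.log(math.log(n)))) + 1
    is_prime = bytearray([1]) * (limit + 1)   # is_prime[i] == 1 means i is prime
    is_prime[0] = is_prime[1] = 0
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            # Cross off every multiple of p from p*p upward; smaller
            # multiples were already crossed off by smaller primes.
            is_prime[p * p :: p] = bytes(len(range(p * p, limit + 1, p)))
    primes = list(itertools.compress(range(limit + 1), is_prime))
    return primes[:n]

if __name__ == "__main__":
    primes = first_n_primes(1_000_000)
    print(len(primes), primes[-1])
```

Sizing the sieve from an upper bound avoids guessing how far to search; for a million primes it sieves only about 6% past the millionth prime, 15,485,863.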

I often think about the same things that you mention in this post. It blows my mind to think of how much computer performance has improved since just the 1980s.

Like yours, the first computer that I ever used was an Apple II+, back when I was in grade school. I still remember using the load, run, and brun commands to navigate the menus and get things to work.

I was kind of lucky when it came to computers during this time period, as my parents worked at a local store called Northeast Computers. I remember going in to visit and playing with computers such as the Apple Lisa! I loved that machine… I wonder if the store ever sold many of them…

Anyway, in about 1985, my dad was able to purchase an original Macintosh 128K with an ImageWriter I printer from the store for the then-amazing price of $1000. At a time when many of my classmates were typing up school reports on typewriters, I had the luxury of using MacWrite, which, while rather primitive by today’s standards (it didn’t have a spell checker, for example), gave me the ability to easily edit a document instead of having to use white-out with a typewriter.

My old computer had two 400K floppy drives (the internal one and an external one; I still have the case for the external drive), which was an immense amount of storage space for a personal computer at the time. I remember a ten-pack of disks at the store costing somewhere around $20, giving you a whopping 4 MB of storage space. Today I can go to Wally World and buy a 32 GB microSD card, which has approximately 8,000 times the storage space of that old ten-pack of disks, for under $10. Wow…
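The disk-versus-card ratio is easy to check. The sketch below compares ten 400K floppies with a 32 GB card, reading "K" and "GB" first as binary units and then as decimal units (the two readings are this sketch's assumption, not something the comment specifies); both land in the 8,000 to 8,400 range.

```python
ten_pack_binary = 10 * 400 * 1024      # ten 400K disks, K = 1024 bytes
card_binary = 32 * 1024 ** 3           # 32 GB read as binary gigabytes

ten_pack_decimal = 10 * 400 * 1000     # same disks, K = 1000 bytes
card_decimal = 32 * 10 ** 9            # 32 GB as card makers advertise it

print(f"binary units:  {card_binary / ten_pack_binary:,.0f}x")
print(f"decimal units: {card_decimal / ten_pack_decimal:,.0f}x")
```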

Of course, I have some serious reservations about how we are deploying all of this computing storage and power (like all of the creepy tracking stuff), but that is a topic I could ponder for hours…

Ah, the luxury of a Mac 128K with MacWrite and ImageWriter. Sometime about 1986 or so, when I was in high school, some teachers did not allow their students to use a word processor! It was considered an unfair advantage over the majority of kids who did not have a computer at home. With MacWrite you could easily make edits to your rough draft and print a new copy, at a time when most students had to laboriously rewrite or retype their entire paper to turn their rough draft into a final version. Eventually the school got enough computers for public use that this restriction was lifted, but it’s interesting to remember the days when a word processor was considered inequitable or even a form of cheating.