Archive for November, 2021

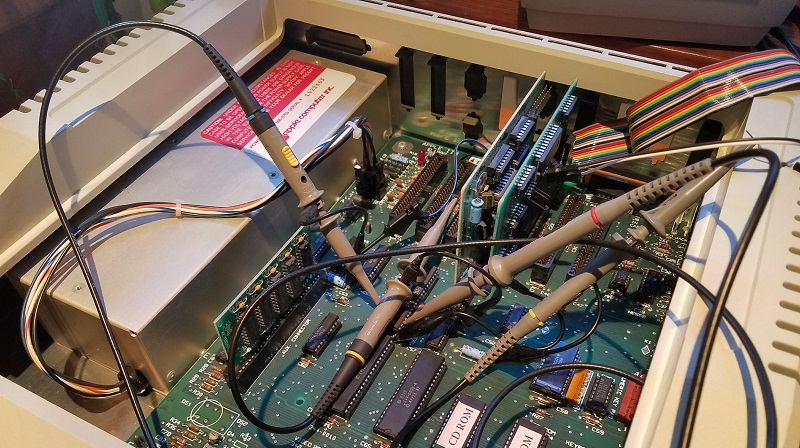

Yellowstone Beta Testing Continues

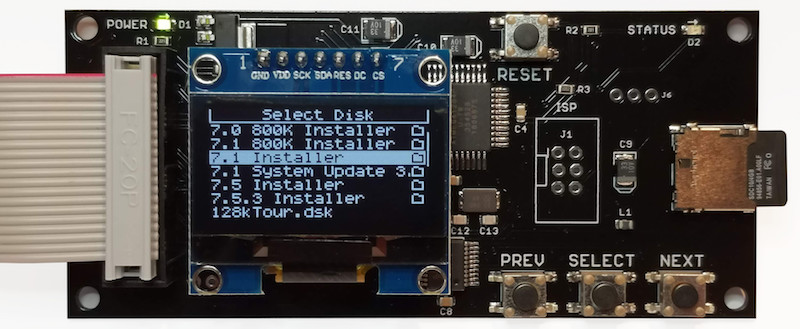

The goal of a “do everything” disk controller for Apple II is getting closer to reality, thanks to the efforts of the hard-working beta testers. If all goes well, I’m hoping to have Yellowstone cards available for sale in the first months of the new year. Yellowstone is designed to support virtually every type of Apple 5.25 inch, 3.5 inch, or smart disk drive that exists, including Macintosh drives running on the Apple II, and the results from the beta testers have been very encouraging so far. But it’s not all perfect. Here are a few of the issues that beta testing has uncovered.

Unidisk 5.25 and Disk IIc Problems at Drive 2

The Unidisk 5.25 and the Disk IIc work fine when connected as Drive 1, but in some situations they appear to interfere with Drive 1 if they’re connected as Drive 2. I don’t have the hardware needed to troubleshoot this one directly, but I should be receiving a Unidisk 5.25 next week. My best guess is that the outputs on these drives have a different type of driver circuit than other drives, which causes the tri-state signals to take longer than normal to get pulled to a logical “1” voltage by Yellowstone’s pull-up resistors. So their “0” outputs effectively overlap with Drive 1’s. Or it may be something completely different.

Third-Party 3.5 Inch Drive Problems

All of the Apple-brand 3.5 inch drives are working, including the Apple 3.5, Unidisk 3.5, Apple SuperDrive, and various Macintosh 3.5 inch drives. But third-party drives from Applied Engineering, Dataspace, Ehman, and Laser only work sporadically or don’t work at all. The Applied Engineering 3.5 drive is supposed to work with a stock Apple IIgs, while the other three reportedly don’t work with any stock Apple computer or disk controller, though they do work with the original UDC disk controller. (It seems like that would have greatly limited their sales appeal.) I have a Laser 3.5 for testing, and I should be receiving an Applied Engineering 3.5 drive next week.

Obscure Crash on Apple IIgs

If your IIgs is configured for 80 column mode, and you use one of the several methods to enable Yellowstone’s Disk II mode immediately from power-on, it will crash. This doesn’t happen after a warm start or reboot, only directly from power-on. It also doesn’t happen in 40 column mode. I suspect my code that prints “YELLOWSTONE DISK II MODE” is doing something bad in 80 column mode. It just calls the HOME and COUT routines in the Apple II ROM. I haven’t looked into this further yet.

3.5 Inch Disk I/O Speed

Depending on what software you’re running and what you’re doing with it, 3.5 inch disk I/O through Yellowstone is anywhere from a little to a lot slower than 3.5 inch disk I/O with the same drive on the Apple IIgs built-in disk port. 5.25 inch disk I/O and smart disk I/O (like the Unidisk 3.5 and Floppy Emu Smartport Hard Disk) are about the same speed as the Apple IIgs built-in disk port. I’m not sure why 3.5 inch I/O is behaving differently, but it may be some combination of sector interleave and code optimization.

Possible Disk Corruption with VidHD

One tester reported that a specific Apple IIgs hardware configuration including VidHD would corrupt 3.5 inch disks during power-up. When VidHD was removed, the problem disappeared. I think this is actually a VidHD problem and not Yellowstone. There was a long discussion on Facebook’s Apple II group earlier this year about people experiencing disk corruption when using VidHD. Initial reports were that the latest firmware fixed it, but that was later called into question.

Possible Problems with TransWarp Accelerator

One tester reported problems when running Yellowstone on a specific unenhanced Apple IIe system with a TransWarp accelerator. The problem didn’t appear with the TW on a different computer, and couldn’t be confirmed by the only other tester with a TransWarp. For now it’s unclear if this is a Yellowstone problem or something else.

Cable Routing Difficulties

A few testers reported that the disk ribbon cables were difficult to route through the opening in the rear of the computer, or that the cables pushed against the card in the neighboring slot. I’ve experimented a bit with using right-angle connectors and some other ideas, but there’s probably nothing much I can do without changing the entire board layout. I think the testers experienced this frustration more often than a typical user will, because the testers are constantly plugging and unplugging different drives in order to verify them.

There are a few more open issues beyond these, but most of them are hopefully at the level of errata, and don’t need to delay production of the final Yellowstone cards. Any Yellowstone firmware bugs that are discovered after launch can be fixed with a firmware update, which can be done in-system on the Apple II computer without any special programming tools.

Cyber Monday 10% Off at the New BMOW Store

Help celebrate the opening of the new BMOW store with a Cyber Monday 10% off sale! You get a discount, I get more people trying the new store platform, and everybody wins. From now until the end of Monday, all BMOW products in the new store will be 10% off the regular price. This sale ends at 11:59 PM Pacific time (UTC-8) on Monday night.

You can visit the new BMOW store at shop.bigmessowires.com, and enter the discount code cyberbmow21 at checkout to get 10% off. This isn’t yet the default store, and most internal and external links still point to the original store at www.bigmessowires.com/shop. Purchases from the original store aren’t eligible for this sale – I need as many people as possible to exercise the new store to confirm it’s running smoothly, before I can deactivate the original store. Thank you for trying out the new store, and happy shopping!

BMOW Store Gets a Major Remodeling

Just in time for the holiday shopping season, the BMOW store has been completely rebuilt using an entirely new technology platform! This brings an armload of major improvements, including new payment and shipping options, the restoration of Australia and New Zealand shipping, and overall much improved features and stability. This is something that’s been on my to-do list for years, but I kept putting it off, as there was always something more urgent. My procrastination continued until the old store mysteriously imploded on the night before Thanksgiving, leaving BMOW unable to process any new orders. Ouch.

The Store Died, Sorry

Some time on November 23rd or 24th, the old store’s payment processing quietly stopped working. There were no errors or other problem indications, except a mysterious lack of new orders and a large number of abandoned shopping carts. I didn’t notice anything was wrong until late on the night before Thanksgiving, as I was making pie. I found that customers could begin the checkout process, enter their payment details, and see the final order review page. But when they clicked the button to submit their order, it redirected them to a blank page. No visible error, nothing in the logs, but no payment was made, no order was submitted.

Unfortunately there was no one I could ask for help; I needed to solve the problem myself. I’ve done it before when something unexpectedly broke, sifting through logs, updating plugins, patching PHP files, changing security settings, or increasing server memory limits and timeouts. The old BMOW store is an awkward collection of cheap and free components, including a generic web hosting plan (Dreamhost), content management software (WordPress), e-commerce software (Woocommerce), a variety of different WordPress and Woocommerce plugins, and a payment gateway plugin (PayPal Express Checkout, from the PayPal for Woocommerce plugin). This system frequently breaks at unexpected times, when one of these components gets automatically updated, or a database table grows too large, or an external dependency changes. But I’m responsible for keeping it all working, and that’s not really a job I want to have.

I sank many hours into troubleshooting this problem unsuccessfully. The logs didn’t reveal any errors or obvious failures. Dreamhost couldn’t help, beyond suggesting that I update everything to the latest versions. This is a double-edged sword in the land of WordPress, because updates are often needed to fix security issues or other problems, but they’re also the most common source of problems. When you’re relying on a dozen different components, and you update one of them, the others might not be happy.

After a frantic late night, I finally managed to fix the problem temporarily on Thanksgiving morning, by eliminating the order review step entirely. But it was now glaringly clear how much risk I was facing by depending on this fragile technology stack with nobody to maintain it but me.

Problems With The Old Store

Even if the old store had continued working OK, it just wasn’t great for customers or for me and Lea. On the customer side, it was very slow and sluggish. Submitting an order would sometimes lead to a 500 internal server error, or a payment made but no order recorded, or two orders for a single payment. Something about shipping options didn’t work correctly, so I was forced to limit customers to a single shipping method instead of offering a choice. The order confirmation and shipping confirmation emails sometimes didn’t work.

And then there was PayPal. The old store only offered a single payment processor, PayPal, which some customers simply hate and refuse to use. PayPal used to offer an option for credit card payments without creating a PayPal account if you didn’t want to, but that seems to have disappeared and now a PayPal account is required for making any kind of payment. Another drawback was the lack of payment integration – customers needed to leave the BMOW site in order to enter payment info at the PayPal site, before returning to the BMOW site for final order review.

PayPal also made it strangely difficult to edit the shipping address. If the customer already had a PayPal account, it would auto-populate the shipping address with their saved address, with no option to change it except by cancelling the order in progress and visiting PayPal preferences. Moved recently, or shipping to an alternate address? Too bad. PayPal’s address validation was also weirdly broken. I’ve had countless orders where people omitted required parts of their shipping address like the building number, city name, or state/territory name.

On the back end, searching for and updating customers’ order records was slow and awkward. Some apparently basic features were not included, like embedding the tracking number into the shipment confirmation email. I had to implement that myself with some custom coding. And while this may not be a fair criticism, the old store simply took orders but offered nothing towards managing shipping. I used a separate third-party shipping solution (Shippo), with a custom-made C# tool to gather the Woocommerce data, process it as needed, generate packing slips and invoices, and purchase and print the postage labels from Shippo.

Hello Shopify!

Shopify is a very popular solution for running e-commerce stores, and I’d already made up my mind long ago to try Shopify when I was ready to update the store. Shopify replaces the entire technology stack of the old store: Dreamhost, WordPress, Woocommerce, plugins, PayPal, and Shippo. It’s a cloud-based solution, so Shopify actually runs your store on their servers, using DNS magic to make it appear under your domain name as part of your regular web site.

Unfortunately Shopify isn’t exactly cheap, at $79/month for the plan I would probably need. But after all the pains experienced with the old store, I decided the money was well worth it. And now that I’ve dug into Shopify’s details further, I think the net cost may be much less than $79/month, thanks to lower fees for credit card processing and shipping than I was paying before. In fact, I may actually see a net savings from switching. Wow!

I was able to create and configure the new Shopify store in one very long day. So far I’m extremely pleased and impressed with what I’ve seen. Everywhere I look, from the theme designs to the order processing and back-end reporting, I find great new features that are a huge step up from what the old store provided. I should have made this switch long ago.

What’s New

The new store looks completely different from the old one, but the customer-facing changes are more than just a facelift. Here’s a tour of what’s new:

New Payment Options – The store uses Shopify Payments, which seems to be a rebranded Stripe. It allows for a dozen different payment methods, including credit cards and others like Apple Pay. It’s all directly integrated into the store, with no separate account needed. Basically it just works. GOODBYE PAYPAL! I will not miss you at all. But PayPal is still an option for anyone who wants it.

New USA Shipping Methods – For customers in the USA, instead of one-size-fits-all shipping, you now have a choice of Standard (3-4 day) or Economy (5-8 day) shipping. For the moment these correspond to USPS Priority and USPS First Class Mail, but that may change in the future. If you want to save a few bucks on shipping and are willing to wait slightly longer, now you have that choice.

Free USA Shipping – Orders over $150 to destinations in the USA will now ship free. Hooray!

New International Shipping Methods – Worldwide DHL and UPS shipping is here! This is a big change, and I’m excited and slightly nervous. These new shipping methods aren’t cheap, at around $50 for most destination countries, but they should be faster and much more reliable. Higher tiers of USPS international service are now available too, including Priority Mail International and Priority Mail Express International. USPS First Class Package International Service remains an option too, and is the lowest cost international shipping method. FCPIS is sometimes great, and you can typically get a package delivered in a week to destinations like Canada, France, or UK. But sometimes FCPIS experiences wild delays with months passing in shipping purgatory without any updates to the tracking info. This is very frustrating for both customers and for me.

I hesitate to call any of these new international shipping methods “express shipping”, because there’s still a few days of order processing time before BMOW can fulfill your order, no matter how fast the actual shipping may be. Our fabulous office specialist Lea normally works three days per week, so the processing time is 1-2 business days in most situations.

The shipping fee for international orders is also now calculated based on the actual carrier cost, plus a small handling fee for the extra work that these orders involve. This is an improvement over the old pseudo-flat-rate pricing scheme for international shipping, which lumped everything into a few broad categories based on weight tiers and geographic region.

Australia and New Zealand Shipping – US postal service FCPIS shipments to Australia and New Zealand have been suspended for the past two months, due to some unspecified “carrier disruptions” related to COVID-19 and global supply chain problems. The introduction of new shipping methods means that BMOW can once again serve customers in those countries. Woo-hoo! Australia Post’s ShopMate USA service also remains an option for customers looking for the cheapest Australian shipping, although the service will reportedly be phased out next year. We hope that FCPIS service to these countries will become available again soon, but until then, these are good alternatives.

Better Tracking – Improved package tracking and notifications are one of the many other improvements made possible by the new store. For international shipments, DHL and UPS will provide much more timely and detailed tracking information than FCPIS. And for customers everywhere, you’ll get email notifications not just when your package is shipped, but also when it’s out for delivery. There’s even an option to get notifications on your phone by text message.

Tour The New Store Today!

The new BMOW store is live and taking orders now at shop.bigmessowires.com. Give it a try! For the moment, both the old store and new store are running and accepting orders, so I can monitor what’s happening at the new store and ensure everything goes smoothly. All the store links are still pointing to the old store, so you’ll need to enter the URL directly (or click the link in this paragraph) to visit the new store. Eventually I’ll retire the old store and set up redirection links for each of the product pages to the new store. If you try the new store, please let me know how the experience goes. What are your thoughts on the visual design and page layout? Any issues with order processing or payments? Thanks for your feedback!

Logic Analyzer Shopping

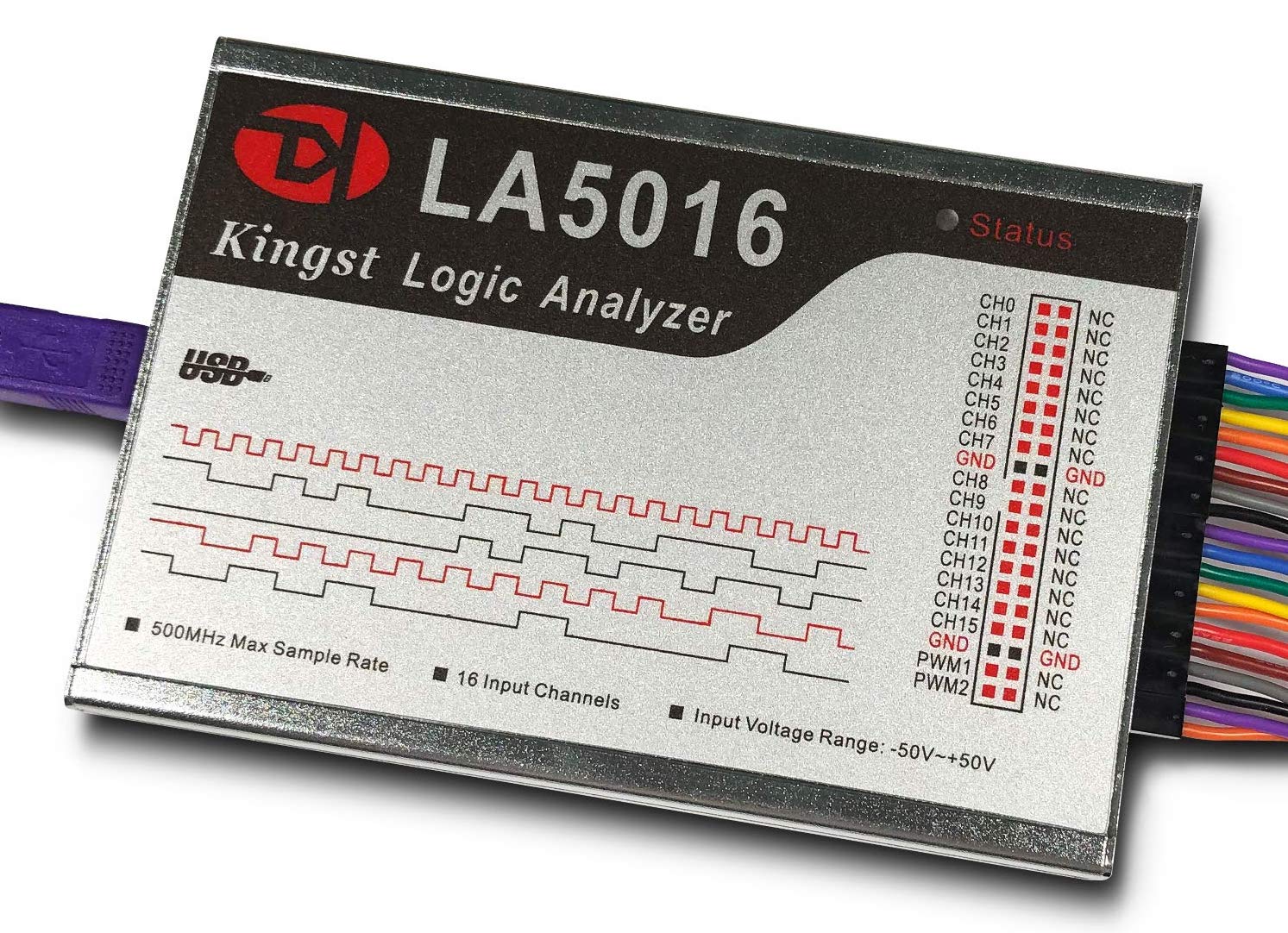

When I first got involved with electronics, I assumed that my most important tool would be an oscilloscope. I bought a Rigol DS1074Z (mini review here), a nice four channel 70 MHz scope, but I’ve rarely used it during the years since then. I’ve discovered that for the type of work I do, the can’t-live-without tool isn’t an oscilloscope but a logic analyzer. I can’t imagine debugging any of my projects without one. I already have three of them, but now I’m shopping for a fourth.

Important features for comparing logic analyzers:

Number of Channels – More channels is better. My Saleae Logic Pro 8 is very nice, but I often need to capture more than eight signals at once. 16 channels is a good number that covers most of my real-world use. 32 channels would be great.

Sample Rate – A faster sampling rate is usually better, but the details are important. In theory 100 megasamples per second would be enough to capture a 50 MHz signal, with one sample for the high part of each period and one sample for the low part. But if you want to capture signals with a non-symmetric duty cycle, or you want better accuracy on where the signal’s rising and falling edges are, it’s better to aim for at least 4x or 5x oversampling. 100 MS/s might do an adequate job of capturing a 20 MHz signal.
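The rule of thumb above can be turned into a quick calculation. This is only a sketch in Python: the function names are made up for illustration, and the edge-timing estimate simply assumes that an edge can land anywhere within one sample period.

```python
def max_signal_mhz(sample_rate_msps: float, samples_per_period: float) -> float:
    """Highest signal frequency (MHz) that still gets the requested samples per period."""
    return sample_rate_msps / samples_per_period

def edge_uncertainty_ns(sample_rate_msps: float) -> float:
    """Worst-case error in locating an edge, taken as one sample period in nanoseconds."""
    return 1000.0 / sample_rate_msps

# Two samples per period is the theoretical minimum for a symmetric signal.
print(max_signal_mhz(100, 2))    # 50.0
# Five samples per period leaves room for uneven duty cycles and better edge placement.
print(max_signal_mhz(100, 5))    # 20.0
# At 100 MS/s, each edge is only known to within about one 10 ns sample period.
print(edge_uncertainty_ns(100))  # 10.0
```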

Beware that all logic analyzers advertise their maximum possible sampling rate, which is almost always limited to a small number of channels or one channel. The maximum sampling rate when recording all channels will be lower. For example the DSLogic U3Pro16 supports 1 GS/s in streaming mode when recording 3 channels, but only 125 MS/s when recording all 16 channels.

Bandwidth – Just like an oscilloscope, a logic analyzer has a maximum bandwidth that’s determined by the analog properties of the test leads and the internal circuitry. The sample rate may be 1 GS/s, but if the input bandwidth is only 50 MHz then any faster signals will appear blurred no matter how high the sample rate may be. Some logic analyzers list a bandwidth number in their spec sheets, but many cheaper ones don’t.

A reality check is also in order here. Even if the LA has a very high sample rate and correspondingly high bandwidth, you can’t necessarily capture high speed signals with it. When you connect a test lead to your circuit, it will change the behavior of the circuit in ways that may interfere with your measurements, or cause the circuit to stop working entirely. It will add more load to the signal, add additional capacitance, and possibly add signal reflections too. Fast signals are more likely to be negatively impacted by these problems. When professionals debug very high speed designs, I think they generally don’t even use logic analyzers, and instead rely on simulations or on signal capture features that are built into the hardware.

Sample Depth – A greater sample depth allows for longer duration recordings. With a long enough recording, you don’t need to worry very much about setting up complex triggers, because you can just record many seconds worth of activity and then scroll through the recording to find points of interest. Given a fixed sample depth, the recording duration gets shorter as the sampling rate or the number of channels increases.

Many logic analyzers use data compression to store more samples than would otherwise be possible. Usually it’s a simple run-length encoding. This means the effective recording duration can be hard to predict exactly, because it depends on the compressibility of the signal data.
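To see why compressibility matters so much, here is a minimal run-length encoding sketch in Python. It is an illustration of the general technique only, not the scheme used by any particular logic analyzer, and the two sample signals are invented for the example.

```python
from itertools import groupby

def rle_encode(samples):
    """Collapse consecutive identical samples into (value, run_length) pairs."""
    return [(value, sum(1 for _ in run)) for value, run in groupby(samples)]

def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sample list."""
    samples = []
    for value, count in runs:
        samples.extend([value] * count)
    return samples

idle_bus = [0] * 1000 + [1] * 1000   # a single edge in 2000 samples
fast_clock = [0, 1] * 1000           # toggles on every sample

print(len(rle_encode(idle_bus)))     # 2
print(len(rle_encode(fast_clock)))   # 2000
```

A mostly idle signal shrinks to a handful of runs, while a signal that toggles on every sample doesn't compress at all, so the same buffer can hold wildly different recording durations.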

Sampling Method – Some logic analyzers use an internal memory buffer for storing samples, and then transfer all the samples to the computer once the recording is finished. This technique usually allows for high sampling rates, at the expense of lower sample depths. Buffer sizes from 64 Mbit to 2 Gbit are typical. Other logic analyzers stream the samples to the computer on-the-fly, as they’re being recorded. This allows for very long duration recordings that are only limited by the amount of RAM in the host computer, but the sample rate is usually lower, and is limited by the maximum possible USB transfer rate. Streaming logic analyzers with a USB 3 connection will support faster sampling rates than those with a USB 2 connection.

Input Circuitry – For the best signal integrity, each test lead should be a shielded wire with independent signal and ground connectors. An unshielded signal wire and a shared ground connector may be OK for sampling slower signals. The input impedance and capacitance are also important to know. A high impedance and low capacitance are desirable, and will reduce the chances that connecting the LA’s test leads will disturb your circuit. For example the Saleae Logic Pro 16 has a 2 megaohm input impedance and 10 pF capacitance, while the Innomaker LA5016 has 220 kOhm impedance and 12 pF capacitance. Some cheaper logic analyzers don’t even specify these numbers, which is a clue that they’re probably not good numbers. Other input specs to watch for are the minimum and maximum safe input voltages, input protection, and selectable thresholds for logic levels.

Analog Channels and Other Features – One extra feature that’s really handy is analog recording. It’s not going to replace your oscilloscope, but sometimes it’s handy to have a low-bandwidth analog view of your signals that’s integrated with the digital view. The Saleae Logic Pro series offers analog recording on all channels at 50 MS/s and 5 MHz max bandwidth. Initially I thought that was a silly feature, but I’ve found that it’s often helpful for troubleshooting signal contention or logic level problems. Another extra that may be useful is an external clock input, to synchronize samples with a microprocessor clock or other synchronous device. I used to think this was a very important feature, even though many logic analyzers omit it. But in truth, over many years of development I have almost never used it. Some logic analyzers also offer features like programmable digital outputs and other goodies. Whether these are valuable to you depends on your application.

Software – Great hardware isn’t very useful unless it’s coupled with great software for controlling the device and analyzing the recorded sample data. Ideally you want to see lots of protocol analyzers for automatically decoding and annotating sample data for common standards like SPI and I2C. It’s also helpful to have robust trigger support, with multi-stage triggers and triggers based on protocol analyzer results. The software should also make it easy to view, zoom, and measure the recorded sample data.

Price – Logic analyzers can range in price from about $10 to $1000 or more. The $10 devices can be perfectly adequate for many uses. Fancier equipment may offer many more features, but is it worth the extra cost?

At BMOW Labs

I currently alternate between two different logic analyzers: a Saleae Logic Pro 8, and a no-name 16 channel device. Both are streaming logic analyzers. I think the no-name device is a clone of the Logic 16 (not to be confused with the much more capable Logic Pro 16). Each of these logic analyzers is useful in different situations. The Logic Pro 8 supports fast sample rates around 100 MS/s or better with all channels, and supports analog recording too. But eight channels is limiting, and one of the channels is broken, so the 7-channel device is often not enough.

The no-name device has 16 channels, but no analog, and the sample rate stinks. In theory it supports 100 MS/s with 3 channels, or 16 MS/s with all 16 channels. But in practice I can never get anywhere near those rates before the software gives me an error saying it can’t keep up with this data rate. With 12 or 16 channels, I usually can’t get any better than about 2-3 MS/s before running into data rate errors. I’m not sure if this is because I’ve saturated the USB 2 connection, or because my reasonably-powerful computer can’t keep up, or something else. I’m very curious to know if other people with similar no-name 16 channel logic analyzers have the same experience. The device I have is similar to this one. My back of the envelope math says USB 2 supports 480 Mbps, divided by 16 channels should allow for 30 Mbps per channel, so even when allowing for USB overhead I should be seeing much better performance than I’m getting.
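The back-of-the-envelope math above can be written out as a small Python sketch. It assumes each channel contributes one bit per sample and that samples are streamed uncompressed. The `efficiency` parameter is a hypothetical fudge factor for USB protocol overhead, not a measured value.

```python
USB2_RAW_MBPS = 480  # USB 2 high-speed signaling rate, before protocol overhead

def max_sample_rate_msps(link_mbps: float, channels: int, efficiency: float = 1.0) -> float:
    """Upper bound on streaming sample rate (MS/s), assuming 1 bit per channel per sample."""
    return link_mbps * efficiency / channels

def link_utilization(rate_msps: float, channels: int, link_mbps: float) -> float:
    """Fraction of the raw link rate used by an uncompressed sample stream."""
    return rate_msps * channels / link_mbps

print(max_sample_rate_msps(USB2_RAW_MBPS, 16))       # 30.0
print(max_sample_rate_msps(USB2_RAW_MBPS, 16, 0.5))  # 15.0 (assumed 50% efficiency)
print(link_utilization(3, 16, USB2_RAW_MBPS))        # 0.1
```

Even with a pessimistic guess for overhead, 16 channels at 3 MS/s would use only about a tenth of the raw USB 2 bit rate, which is why the observed limit is puzzling.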

So I’m searching for a logic analyzer with 16 channels, supporting sample rates of at least 50-100 MS/s when all channels are used simultaneously, ideally with analog capability, and with decent software. Here are some options I identified:

Some links below may be affiliate links. BMOW may get paid if you buy something or take an action after clicking one of these.

| | Saleae Logic Pro 16 | Innomaker LA2016 | Innomaker LA5016 | DSLogic Plus | DSLogic U3Pro16 |
| --- | --- | --- | --- | --- | --- |
| Sampling Method | streaming | buffered | buffered | buffered, streaming | buffered, streaming |
| Sample Rate with 16 channels | 100 MS/s | 200 MS/s | 500 MS/s | 100 MS/s, 20 MS/s | 500 MS/s, 125 MS/s |
| Bandwidth | 100 MHz | 40 MHz | 80 MHz | ?? | ?? |
| Min Pulse Width | ?? | 12.5 ns | 6.25 ns | 5 ns | 2 ns |
| Sample Depth per Channel (uncompressed) | unlimited | 50 MS | 100 MS | 16 MS, 16 GS | 128 MS, 16 GS |
| Test Leads | unshielded, independent grounds | unshielded, common ground | unshielded, common ground | shielded, independent ground | shielded, independent ground |
| Input Impedance | 2 Megohm 10 pF | 220 kOhm 12 pF | 220 kOhm 12 pF | 250 kOhm 13 pF | 250 kOhm 13 pF |
| Voltage Range | -25V to +25V | -50V to +50V | -50V to +50V | -30V to +30V | -30V to +30V |
| Other | analog on all channels | 2 PWM outputs | 2 PWM outputs | external clock input | external clock input |
| Software | simple triggers | simple triggers | simple triggers | complex triggers | complex triggers |
| Price | $1000 | $200 | $300 | $150 | $300 |

It’s tough to pick any clear winners here, but the Logic Pro 16 seems to occupy a category by itself. It’s the only all-streaming device, supporting unlimited sample depths, which is great so long as you don’t encounter unexplained data rate errors. It’s the only device with analog capability, which I’ve found to be a surprisingly useful feature. Its input impedance and bandwidth are the best of this bunch. Saleae’s software and support have a good reputation, and the overall hardware build quality is very good. The only real drawbacks are a somewhat lower maximum sample rate with 16 channels, weaker trigger options, and a much higher price than the alternatives.

The Innomaker devices are popular and earn mostly good reviews for the hardware and software. They offer options with different sample rates and sample depths at different prices, so you can choose the one that works best for you. I have some reservations about the sample depth. With 50 megasamples per channel (uncompressed) on the LA2016, 200 MS/s recording would fill up the buffer in just 250 ms. That’s definitely too short to accommodate the way I’m accustomed to working. If compression could stretch this to a few seconds, it might be enough, but that’s something that can’t really be evaluated until you try it. The LA5016 offers twice the sample depth, but it’s still not a lot.
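The buffer arithmetic is simple enough to sketch in Python, using the per-channel depths and 16-channel sample rates from the table. The `compression_ratio` parameter is purely hypothetical, since the real ratio depends on the signal being captured.

```python
def buffer_fill_seconds(depth_msamples: float, rate_msps: float,
                        compression_ratio: float = 1.0) -> float:
    """Recording time before a per-channel buffer of the given depth is full."""
    return depth_msamples * compression_ratio / rate_msps

print(buffer_fill_seconds(50, 200))        # 0.25  (LA2016, uncompressed)
print(buffer_fill_seconds(100, 500))       # 0.2   (LA5016 at its full 16-channel rate)
print(buffer_fill_seconds(50, 200, 10.0))  # 2.5   (LA2016 with an assumed 10x compression)
```

Note that the LA5016’s extra depth only buys a longer recording if it’s run at the same sample rate as the LA2016. At its own maximum rate the buffer fills even sooner.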

The DSLogic devices can operate in buffered or streaming mode, and may have a leg up over the Innomaker competition. In buffered mode, their hardware specs are roughly similar to the Innomaker devices, with the specs appearing to scale by price in a reasonable way. But they also offer a streaming mode option, to achieve modestly longer duration recordings. For unknown reasons, the streaming mode doesn’t provide unlimited sample depth like the Saleae, but is limited to a total maximum depth of 16 GS per channel. While not unlimited, that’s enough for very long captures even at the highest sample rates. DSLogic also provides shielded test leads with independent grounds, and supports more complex triggers than some of the other devices here.

One knock against DSLogic concerns the company rather than the devices themselves. Their DSView software is based on the sigrok open source project, and early versions of the software reportedly used the sigrok code without attribution and without making the derived code public. This has now been resolved, but there’s a lingering sense that DSLogic is not playing fair and there’s some friction between them and the sigrok developers.

BMOW’s Choice

For my own purposes, I think I can limit the options to the Saleae Logic Pro 16 and the DSLogic U3Pro16. The LA2016 and DSLogic Plus are good values for the price, but I think I’d prefer to pay a little more for faster sample rates and greater sample depths. The LA5016 is very similar to the U3Pro16, and they’re the same price, but the U3Pro16 is slightly better in nearly every category. That leaves just two contenders for the prize.

Do I want to spend more than 3x as much for the Saleae to get analog capability and a better corporate reputation? The DSLogic has arguably better test leads, and better sampling rates in both streaming and buffered modes, although its input circuitry is probably worse. It’s a tough call. If I were spending somebody else’s money, I would probably choose the Saleae. But when it’s my own dollar, the magnitude of the price difference is hard to swallow.

The Amazing Disk II Controller Card

In the world of Apple II disks, there are two major types of disk controller cards: the original Disk II controller (and clones), and everything else. Both have their place. The “everything else” category includes the Apple 3.5 disk controller card, Liron card, SCSI cards, IDE cards, and more. These cards provide a standard API for software to read/write blocks, get drive status, and format the disk, all without requiring the software to know anything about how the disk actually works. These cards have built-in smarts to handle the low-level details. In contrast the original Disk II controller card is dumb as dirt, and forces the software to handle virtually all of the low-level details. And yet it’s an amazing piece of technology for its time.

The first Apple II models had no built-in floppy disk support. The Disk II controller was cleverly designed to add that missing support at a very low cost, and was a major reason why Apple II computers became so popular. This disk controller was simpler, and cheaper, and more flexible, and just all-around better than any of its contemporary competition. It’s the ultimate example of Woz can-do technology.

Floppy Disk 101

A floppy disk is just a plastic circle with a magnetic coating. Loaded into a drive, the disk rotates at about 300 RPM. A stepper motor moves the read/write head linearly from the center of the disk to the outer rim. This arrangement provides for a few dozen concentric rings where a serial stream of 1s and 0s can be stored.

How do you get from these basics to higher-level concepts like bytes, tracks, and sectors? How are logical data bytes encoded into bit patterns on the disk? When reading the disk, how are the bits framed into bytes? How do you find track zero, or the boundaries between sectors? The conventional answer to these questions in the 1970s was extra hardware, and lots of it. This made the disk controllers and the drives themselves complex and expensive, putting them mostly out-of-reach for an inexpensive home computer system.

It was late 1977 when Apple set its sights on finding an alternative to cassette tape data storage, and began looking into options for a floppy drive for the Apple II. They were still a small and unproven company, and the Apple II had only been available for about six months. Woz didn’t know much about the subject of floppy disks, but he agreed to take on the challenge.

Woz’s approach was to remove virtually all of the hardware that controls the disk, and take a software-driven approach akin to bit-banging. Apple went to Shugart, the inventors of the 5.25 inch floppy drive, and requested a stripped-down version of Shugart’s SA400 drive with most of the control electronics removed. It was just a simple mechanism with one motor for spinning the disk and a stepper motor for moving the read/write head. As the legend goes, the entire Disk II hardware design was conceived and built by Woz and Randy Wigginton over a few weeks during Christmas vacation 1977, including writing the first version of DOS, and the working disk drive was demoed at CES in January 1978. Additional help was provided by Apple engineers Cliff Huston and Wendell Sander. 40+ years later I’m amazed by how quickly this small team was able to make everything work.

A few years ago I asked Woz about the Disk II development, and he said this:

I have no idea how I came up with that incredible disk controller. I was good at creating anything in electronics, analog or digital. I had no prior experience of any kind, not even in classes, regarding disk hardware or software. So my thinking had to be from the ground up. I had to sense data coming from the disk and make decisions about 0’s and 1’s based on timing.

I had taken a graduate level course at Berkeley (although an undergrad, I only took grad courses in anything having to do with computers in any university) on state machines and thought of how I could use 2 simple low cost chips as a state machine to do this, sort of a minimal microprocessor hand-built. At the time I just knew that it would read and write data but I assumed that I was leaving out many ingredients of a disk controller due to not knowing what they did. I assumed this because my design took so few parts. But in the end, mine did more in some good ways, especially since it was in the computer and tied to software that could alter how it worked, which eventually led to greater storage and faster speed that would not have been possible using the normal disk. Plus, I took about 20 chips off the drive itself and bypassed them from my own controller, because they were just middlemen that got in the way of things.

The best work I did, over and over, was partly due to not having money and having to learn how to use the fewest parts of anyone, and also due to the fact that everything great I created I had never done before.

A Tour Of The Disk II Controller

The Disk II controller card is basically just a fancy shift register. It knows how to read and write bits at a fixed rate of 1 bit every 4 microseconds. The card also has a tiny 256 byte ROM containing bootstrap code that runs when the computer first turns on. It’s a minimal 6502 program with just enough smarts to locate track 0, sector 0, load it into the computer’s memory, and then execute it. Every other aspect of disk control is handled by software.

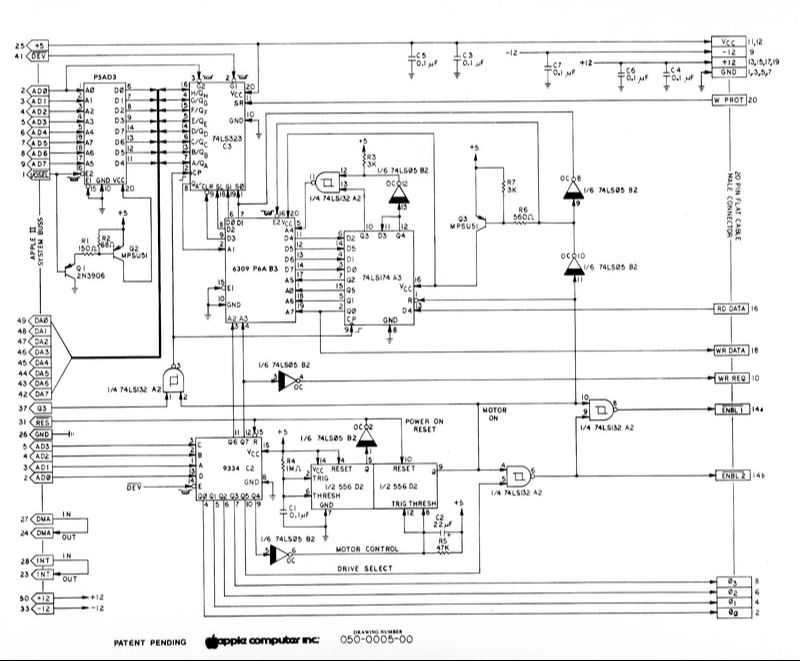

The card contains only eight simple chips. There’s a 256 byte ROM containing the bootstrap code, and a second 256 byte ROM used as part of a state machine (more on this in a moment). There’s also a 74LS174 hex flip flop providing the inputs for the state machine. A 74LS323 eight bit shift register is the heart of the whole design. A 74LS259 addressable latch stores the desired state of the motors and the drive 1 or 2 selection. There’s a 556 dual timer, and a 74LS05 hex inverter and 74LS132 quad NAND to provide some needed glue logic. That’s it. That’s the entire disk controller. Here’s the schematic:

Let’s go through the challenges of floppy disk I/O one at a time, and look at how the Disk II controller design solved them.

Challenge #1: byte framing. The data coming from the disk is a continuous stream of 1s and 0s, and there are no start or stop bits. So how do you know where one byte ends and the next byte begins? Woz’s solution was to require that every byte written to the disk have 1 as the most significant bit. During a disk read, the state machine takes bits from the disk one at a time, moving the shift register one position left and appending the new bit at the right. It keeps going until the left-most bit position holds a 1, at which point the state machine says “Aha! Here is a complete byte!” Then the CPU stores the byte, and the process begins again. The state machine clears the shift register after the MSB becomes 1, so it’s ready to shift in the eight bits for the next byte.

By itself this solution isn’t enough. If the state machine starts reading bits in what was actually the middle of a byte, it will probably misinterpret a 1 bit in the middle of the byte as being the 1 bit for the MSB position. But this scheme ensures that if the state machine gets the byte framing correct just once, whether by luck or another method, it will continue to be correct from then on. So the challenge is finding a way to guarantee the framing is correct before beginning to read disk data.
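To make the framing rule concrete, here’s a minimal Python sketch of the shift-and-wait-for-MSB behavior described above. The function names `frame_bytes` and `to_bits` are made up for illustration, and the model ignores all timing; it only shows how the same bit stream frames differently depending on where reading starts.

```python
def frame_bytes(bits):
    """Frame a bit stream into disk bytes: shift left, append each new bit,
    and emit a byte as soon as the most significant bit becomes 1."""
    framed = []
    shift = 0
    for bit in bits:
        shift = ((shift << 1) | bit) & 0xFF
        if shift & 0x80:       # MSB is 1: a complete byte has arrived
            framed.append(shift)
            shift = 0          # clear the register for the next byte
    return framed


def to_bits(data):
    """Expand bytes into a flat list of bits, most significant bit first."""
    return [(byte >> i) & 1 for byte in data for i in range(7, -1, -1)]


stream = to_bits([0xD5, 0xAA, 0x96])
aligned = frame_bytes(stream)          # start exactly on a byte boundary
misaligned = frame_bytes(stream[1:])   # start one bit late

print([f"{b:02X}" for b in aligned])
print([f"{b:02X}" for b in misaligned])
```

Starting on a byte boundary recovers the original three bytes. Starting one bit late frames the first eight bits as a different value, which is exactly the failure that the sync pattern exists to prevent.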

The conventional solution is to write a special 50-bit pattern of so-called sync bytes to the disk, immediately before each sector. These aren’t really bytes at all, but a 10-bit pattern 1111111100 repeated five times. This pattern has the interesting property that no matter where the byte framing is initially, it will fall into correct synchronization after at most five repetitions of the pattern, just by following the state machine rules described previously. This solution is entirely software-driven, and is merely a convention. The hardware itself has no mechanism to guarantee correct byte framing. There are other methods of ensuring framing, and some of the bizarre richness of Apple II copy-protection schemes arises from different approaches to framing taken by the custom I/O routines in many games.
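The self-synchronizing property can be checked with a small simulation. This sketch assumes the simplified framing model from the text (shift until the MSB is 1, then clear); `frame_bytes` and `to_bits` are illustrative names, not anything from DOS or the controller ROM. It starts reading at every possible bit offset within the first sync pattern and looks at what comes out after five repetitions.

```python
SYNC = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]   # the 10-bit pattern 1111111100


def frame_bytes(bits):
    """Shift bits in from the right and emit a byte whenever the MSB is 1."""
    framed = []
    shift = 0
    for bit in bits:
        shift = ((shift << 1) | bit) & 0xFF
        if shift & 0x80:
            framed.append(shift)
            shift = 0
    return framed


def to_bits(data):
    """Expand bytes into a flat list of bits, most significant bit first."""
    return [(byte >> i) & 1 for byte in data for i in range(7, -1, -1)]


payload = [0xD5, 0xAA, 0x96]
stream = SYNC * 5 + to_bits(payload)

# Begin reading at each of the ten possible offsets inside the first pattern.
results = {offset: frame_bytes(stream[offset:]) for offset in range(len(SYNC))}
for offset, framed in results.items():
    print(offset, [f"{b:02X}" for b in framed])
```

In every case the last three framed bytes are the payload, even though the first few bytes vary with the starting offset. The trailing zero bits of each pattern nudge a misaligned reader a little closer to the true boundary on every repetition.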

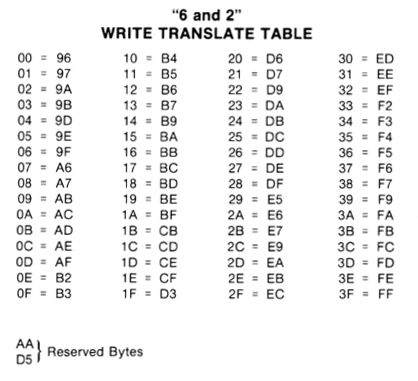

Challenge #2: byte encoding. If every byte written to disk must have 1 as the MSB, then how do you write a zero byte, or any other byte with a value less than 128? And there are other restrictions too: every byte written to disk must have no more than two consecutive zero bits. If there are three consecutive zero bits, the disk hardware can’t reliably read back the data. The standard DOS 3.3 rules are slightly stricter than that: a disk byte may contain at most one pair of consecutive zero bits, and apart from the two reserved marker values D5 and AA, it must also contain at least two adjacent 1 bits after the MSB. Given these requirements, there are only 66 possible 8-bit values that are permitted to be written to the disk. How then can arbitrary 8-bit values be stored?

The answer is to split up the logical 8-bit bytes, and store their bits in subgroups as part of multiple disk bytes. The standard way of doing this is a GCR encoding scheme called 6-and-2. With 66 possible values for the disk byte, and two reserved values, that leaves 64 possible disk bytes for encoding data. 64 is 2 to the 6th power, so six logical bits can be encoded in every disk byte. A series of three disk bytes can encode the first six bits of three logical bytes, and a final fourth disk byte can encode the last two bits of the three logical bytes, concatenated together. This means the number of bytes stored on disk is 4/3 times the number of logical bytes, ignoring headers and checksums and padding.
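The bit-splitting idea can be sketched in a few lines of Python. This is a simplified illustration of the arithmetic only: the function names are hypothetical, and the real DOS 3.3 routines order and pack the two-bit leftovers differently and then translate each 6-bit value into a valid disk byte.

```python
def split_6_and_2(a, b, c):
    """Split three 8-bit bytes into four 6-bit values (each 0..63)."""
    high = [a >> 2, b >> 2, c >> 2]                    # top six bits of each byte
    low = ((a & 3) << 4) | ((b & 3) << 2) | (c & 3)    # leftover two bits, packed
    return high + [low]


def join_6_and_2(values):
    """Rebuild the three original bytes from four 6-bit values."""
    h0, h1, h2, low = values
    return (
        (h0 << 2) | ((low >> 4) & 3),
        (h1 << 2) | ((low >> 2) & 3),
        (h2 << 2) | (low & 3),
    )


packed = split_6_and_2(0x00, 0x7F, 0xFF)
print(packed)                 # four values, all below 64
print(join_6_and_2(packed))   # the original three bytes again
```

Because each of the four values fits in six bits, each one can be mapped onto one of the 64 permitted disk bytes, which is where the 4/3 expansion comes from.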

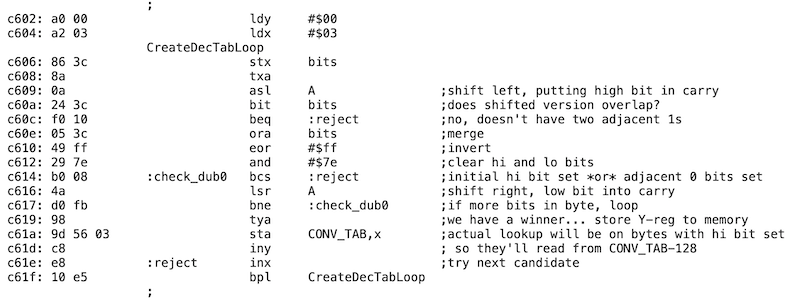

You might wonder how the Disk II controller bootstrap code accomplishes the GCR decoding for sector 0, track 0. At first glance, it would seem to require storing a 64-entry reverse lookup table in ROM, which is already one quarter of the very limited ROM space available. The bootstrap code actually uses a much cleverer solution, and constructs a 256-entry forward lookup table in RAM on the fly, using only 30 bytes of 6502 code!
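The reason a table can be built on the fly is that the valid disk bytes follow simple bit-pattern rules, so they can be generated instead of stored. The Python sketch below is not a translation of the ROM code. It assumes the commonly documented DOS 3.3 rules (MSB set, at most one pair of consecutive zero bits, and at least two adjacent 1 bits among the lower seven bits) and assigns 6-bit values to the valid bytes in ascending order.

```python
def is_valid_disk_byte(value):
    """Apply the assumed 6-and-2 rules to one 8-bit value."""
    bits = f"{value:08b}"
    if bits[0] != "1":                       # MSB must be 1
        return False
    zero_pairs = sum(1 for i in range(7) if bits[i:i + 2] == "00")
    has_adjacent_ones = "11" in bits[1:]     # ignore the MSB for this rule
    return zero_pairs <= 1 and has_adjacent_ones


# Walk all byte values in order and number the valid ones 0, 1, 2, ...
valid = [v for v in range(256) if is_valid_disk_byte(v)]
decode = {disk_byte: index for index, disk_byte in enumerate(valid)}

print(len(valid), f"{valid[0]:02X}", f"{valid[-1]:02X}")
print(0xD5 in decode, 0xAA in decode)
```

Under these assumptions the loop finds exactly 64 disk bytes, running from 96 to FF, and the reserved values D5 and AA fall outside the table because they have no adjacent 1 bits after the MSB.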

The Apple II floppy byte encoding has evolved over time, resulting in a changing number of sectors and total disk capacity. The first version of the Disk II controller card didn’t permit any consecutive zeroes to be written to the disk. This further limited the number of possible disk bytes, and forced the use of a less efficient 5-and-3 encoding scheme. It was only possible to fit 13 sectors per track, resulting in 114 KB total disk capacity. Apple DOS 3.1 and 3.2 used the 5-and-3 scheme. Eventually Woz or one of his teammates realized that with a small change to the state machine, it would be possible to read two consecutive zeroes reliably. All it required was a change to the contents of the state machine ROM, essentially fixing a small bug in order to make the bit timing measurements more reliable. No hardware changes were needed to the Disk II controller. The more efficient 6-and-2 scheme was introduced beginning with DOS 3.3, ushering in the 16 sector tracks and 140K disks we’re familiar with now.
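The capacity figures are easy to check. This sketch assumes the customary 35 tracks and 256-byte sectors mentioned elsewhere in this post. It also counts how many byte values have the MSB set and no consecutive zero bits at all, which is the stricter rule that forced the 5-and-3 scheme.

```python
TRACKS = 35          # customary track count
SECTOR_BYTES = 256   # standard sector size


def capacity_bytes(sectors_per_track):
    """Total data capacity of one disk side, in bytes."""
    return TRACKS * sectors_per_track * SECTOR_BYTES


for sectors in (13, 16):
    total = capacity_bytes(sectors)
    print(sectors, "sectors:", total, "bytes =", total / 1024, "KB")

# Byte values with the MSB set and no two zero bits in a row.
no_double_zero = [v for v in range(0x80, 0x100) if "00" not in f"{v:08b}"]
print(len(no_double_zero), "candidate disk bytes for the stricter rule")
```

Only 34 values survive the stricter rule. That’s enough for 32 data values, or five bits per disk byte, but not the 64 needed for six bits per disk byte.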

As with the byte framing, this whole encoding scheme is purely a software convention. There’s nothing about the hardware that implements 6-and-2 or 5-and-3 or any other encoding method. Sector 0 track 0 must be encoded using 6-and-2, because that’s what the ROM bootstrap code expects, but after that anything goes. Software is free to use any other encoding scheme it wishes, and many copy-protected programs use novel encoding schemes in order to obfuscate their workings.

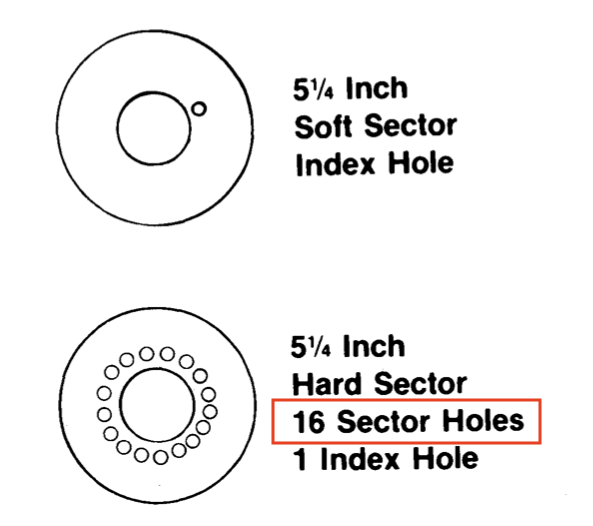

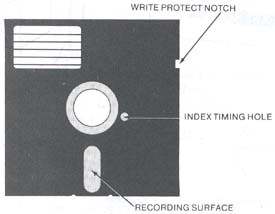

Challenge #3: sectoring. Once you’ve got the bitstream correctly framed into disk bytes, and the disk bytes correctly decoded into logical bytes, how do you make any sense of the data? It’s a ring buffer, so how do you know where the data begins and ends? 1970s floppy disks often used one or more small holes punched in the disk at regular intervals around the circumference. A small opening was cut in the disk’s dust jacket in order to reveal the index holes as they passed underneath. Hardware inside the disk drive sensed when these holes passed by as the disk rotated, and this information was used to determine where a new track or new sector began.

It’s easy to see why this might be undesirable. The hole-sensing hardware adds extra complexity and cost. And in the case of hard-sectored floppies with a hole for every sector, the number of sectors becomes part of the hardware design and can’t be changed. Apple’s move from 13-sector to 16-sector format would have been impossible with hard-sectored disks.

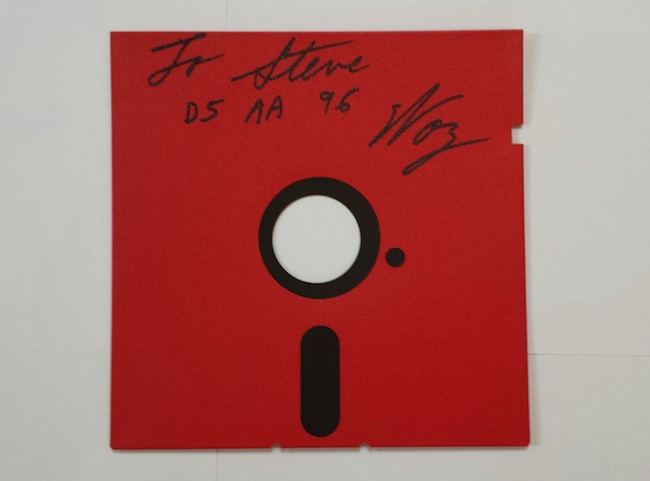

The Disk II design takes a software-driven approach to sectoring. Any index holes on the disk are ignored. When it wants to find a particular sector on the current track, the computer begins reading bytes, ignoring everything it sees until it finds the three-byte sequence D5 AA 96. This signature marks the beginning of a new sector on the disk, and is possibly the most famous byte sequence in the entire kingdom of Apple II arcana. On the wall of my office hangs a 5.25 inch floppy disk with a D5 AA 96 greeting signed by Woz himself:

A short sector header follows this signature, and among other things the header contains the sector number. If it’s the sector number the computer was looking for, then it reads the bytes that follow. If it’s not the right sector, then it keeps looking for another D5 AA 96 to indicate the beginning of the next sector, and tries again.
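Here’s a rough Python sketch of that search loop, operating on bytes that have already been framed. The header layout is an assumption that goes beyond what’s described above: it follows the commonly documented DOS 3.3 address field, where volume, track, sector, and a checksum are each stored as two disk bytes in a “4-and-4” encoding. The function names are illustrative, and the sketch treats the track as a flat buffer rather than a ring.

```python
PROLOGUE = bytes([0xD5, 0xAA, 0x96])


def encode_44(value):
    """Store one byte as two disk bytes: odd bits, then even bits."""
    return bytes([(value >> 1) | 0xAA, value | 0xAA])


def decode_44(first, second):
    """Recombine the two halves written by encode_44."""
    return ((first << 1) | 1) & second & 0xFF


def make_header(volume, track, sector):
    """Build a prologue plus four 4-and-4 encoded fields (assumed layout)."""
    checksum = volume ^ track ^ sector
    fields = b"".join(encode_44(v) for v in (volume, track, sector, checksum))
    return PROLOGUE + fields


def find_sector(track_bytes, wanted_sector):
    """Return the offset just past the matching header, or None."""
    pos = 0
    while True:
        pos = track_bytes.find(PROLOGUE, pos)
        if pos < 0 or pos + 11 > len(track_bytes):
            return None
        fields = track_bytes[pos + 3:pos + 11]
        volume, track, sector, checksum = (
            decode_44(fields[i], fields[i + 1]) for i in range(0, 8, 2)
        )
        if sector == wanted_sector and checksum == volume ^ track ^ sector:
            return pos + 11
        pos += 3   # not the one we want: keep looking for the next D5 AA 96


# A toy track image: three headers, each followed by eight filler bytes.
track_image = b"".join(make_header(254, 0, s) + b"\xFF" * 8 for s in (5, 0, 9))
print(find_sector(track_image, 0), find_sector(track_image, 9), find_sector(track_image, 3))
```

Sectors can appear in any order around the track, so the loop simply skips headers until it sees the sector number it wants.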

This whole business is – you guessed it – purely a software convention. The D5 AA 96 signature, the sector header, the length of sectors, and everything else are merely conventions. There’s nothing whatsoever about the Disk II controller card hardware that requires software to work this way, and some software takes a different approach. One well-known example was the game Prince of Persia, which used a custom scheme called RWTS18 that was optimized for reading as opposed to writing, and used six 768-byte sectors per track instead of the standard sixteen 256-byte sectors.

Challenge #4: finding tracks. So far we’ve only discussed data in a single one of the concentric rings on the disk. These rings are usually called tracks, but as we’ll see, the definition of exactly what constitutes a track can sometimes be fuzzy. So how do you switch between tracks, or locate a specific track? And just how many tracks are there? The Disk II and its controller hardware don’t answer these questions. Instead, it’s all (say it with me now) software-driven.

On the disk media there’s no such thing as a track – it’s just a featureless round expanse of magnetic media. Tracks are created when the read/write head remains at a fixed radial position while the disk spins underneath and bits are written. Then the head moves inward or outward to a new radial position, and writes a new track.

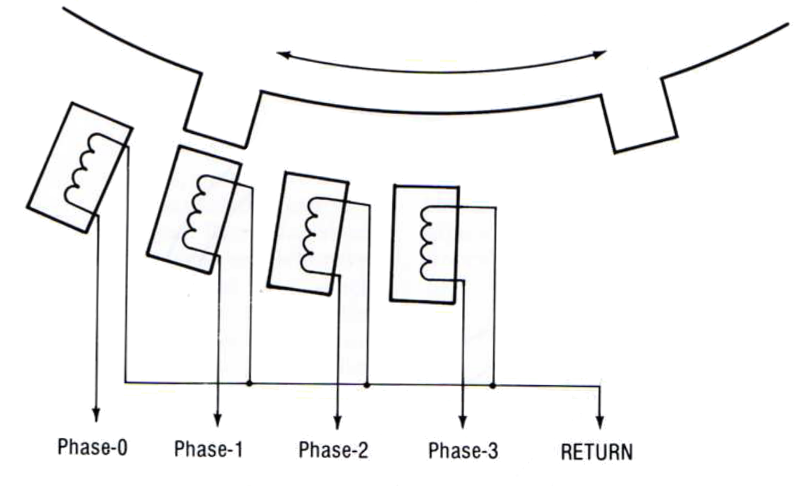

The head movement is controlled by a stepper motor, under direct software control. The stepper consists of four electromagnets, and at any moment the software can turn any of them on or off. A series of permanent magnets are attached to a gear that moves the read/write head, and by activating the electromagnets in the right sequence, they can attract or repel the permanent magnets and move the head. If stepper electromagnet 0 was on, and then electromagnet 1 is turned on and electromagnet 0 turned off, the head will move a small radial distance. Then if electromagnet 2 is turned on and electromagnet 1 turned off, the head will move further in the same direction.

How closely can you space the tracks? It turns out that two of these head movements are normally needed in order to move the head far enough so that a track won’t interfere with its neighbors. If you try to write tracks with only one head movement between them, the magnetized areas of the disk media from the adjacent tracks will bleed into each other and cause a mess. For this reason, two movements are normally considered to be equal to one track, and a single movement is a half track. Quarter tracks are also possible, but aren’t used by most software. If electromagnet 0 was on, and then electromagnet 1 is also turned on, the head will move a quarter track. If electromagnet 0 is then turned off, the head will move an additional quarter track.
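The relationship between electromagnets and head position can be modeled with a little modular arithmetic. This is a toy Python model, not real drive physics: it assumes the head position is counted in quarter tracks, that a single energized electromagnet `n` pulls the head to the nearest position equal to `2 * n` modulo 8, and that two adjacent electromagnets pull it to the point midway between them. The function name `settle` is made up.

```python
def settle(position, phases_on):
    """Return the new head position (in quarter tracks) after energizing
    the given set of electromagnets (numbered 0..3)."""
    phases = sorted(phases_on)
    if len(phases) == 1:
        target = 2 * phases[0]            # one magnet: a half-track position
    elif phases in ([0, 1], [1, 2], [2, 3]):
        target = 2 * phases[0] + 1        # two neighbors: the point in between
    elif phases == [0, 3]:
        target = 7                        # neighbors across the wrap-around
    else:
        return position                   # combinations this sketch ignores
    delta = (target - position) % 8       # shortest move to a matching position
    if delta > 4:
        delta -= 8
    return max(0, position + delta)       # the head can't go below track 0


position = 0                              # track 0, electromagnet 0 on
position = settle(position, {0, 1})       # also turn on 1: quarter track
print(position)
position = settle(position, {1})          # turn off 0: half track
print(position)
position = settle(position, {2})          # on to electromagnet 2: full track
print(position)
```

In this model a position of 4 is one full track from where the head started, matching the rule of thumb that two single-magnet movements equal one track.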

The method of locating track 0 is as crude as can be. The disk controller doesn’t know at what track the read/write head is currently located, so software must activate the stepper electromagnets in sequence in order to move the head continuously in the direction of track 0. Eventually the head will reach track 0 and can move no further, but the software will keep activating the electromagnets, driving the head against a mechanical stop and producing the familiar rat-a-tat sound of an Apple II floppy drive during boot-up. After 80 half steps, the head is guaranteed to be at track 0. From that point on the software must keep track of all stepping movements, and remember what track the head is currently on, in order to perform relative steps. If the software gets confused, say by reading what it thinks is track 20 but finding data for a different track there, it will usually recalibrate by repeating the track 0 seek and then immediately stepping back to the desired track. This creates a clack-clack sound that many long-time Apple II users will recognize as the sign of a failing disk.
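The recalibration logic is simple enough to simulate. This Python sketch assumes the head position is counted in half steps and that the mechanical stop sits at position 0; `recalibrate` is an illustrative name, not a real DOS routine.

```python
def recalibrate(start_half_track, steps=80):
    """Blindly step toward track 0 a fixed number of times.

    Returns the final position and how many steps hit the mechanical stop."""
    position = start_half_track
    bumps = 0
    for _ in range(steps):
        if position > 0:
            position -= 1      # the head actually moves
        else:
            bumps += 1         # already at the stop: this is the rat-a-tat
    return position, bumps


for start in (0, 35, 79):
    print(start, recalibrate(start))
```

Wherever the head starts, it ends at position 0. The closer it started to track 0, the more of the 80 steps are wasted banging against the stop.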

It’s customary to store 35 tracks per disk, but this is merely a convention. The true limit varies slightly from one drive to the next, and is determined by the maximum and minimum linear positions of the read/write head. Non-standard disks with up to 40 tracks are sometimes seen.

Copy-protected Apple II games very often play funny tricks with track stepping. A simple trick is locating a track at some odd number of half-steps from track 0. Tracks must be at least two half steps apart, but there’s no rule saying they can’t be three half steps apart, so you might find a game disk with data on tracks 0, 1, 2.5 and 4. This will confuse disk copy programs that only expect to find data on integer numbered tracks.

A more advanced trick is writing data on two tracks that are just a half step apart, but only using half the circumference of the disk for each track, so the magnetized areas won’t interfere with each other. There might be a half-track’s worth of data at track 2 from twelve o’clock to six o’clock around the disk, and then another half-track’s worth of data at track 2.5 from six o’clock back around to twelve o’clock. Data that’s written this way is easy to read, but is difficult to write without specially-crafted routines, making disk copies difficult.

Bootstrap Code Disassembly

A disassembly and analysis of the 256-byte bootstrap routine is fascinating. Starting with literally nothing, this code must bang directly on soft switches to control the stepper and read the shift register. It must activate the stepper electromagnets in the right pattern to reach track zero, and then begin reading bytes. It must recognize the D5 AA 96 signature, and check to see if it has the right sector. It must use a GCR decode table which it constructs on-the-fly in order to convert disk bytes. It must perform 6-and-2 decoding to reconstruct three logical bytes from four disk bytes, load the whole sector into a RAM buffer, and then jump to the just-loaded code. And all of this in only 256 bytes!

Woz gets the job done with five bytes to spare, and only about 100 lines of 6502 assembly code. But due to space constraints, some features were omitted. The Disk II controller bootstrap code doesn’t verify the checksum on sector 0, something that was “fixed” in later disk controllers but caused incompatibility with some games. There’s also no error handling, so the bootstrap code will keep trying forever to load sector 0. This explains why an Apple II with Disk II controller card appears to hang during booting if there’s no disk in the drive, or a bad disk, rather than show a nice error message like the Apple IIc or IIgs.

Disk II Controller Hardware Design

This discussion has focused on what the Disk II controller does, without going into much detail about precisely how it does it, and how those eight chips are used. For an excellent and very thorough breakdown I recommend reading Chapter 9 of Understanding the Apple II by Jim Sather. It goes into additional detail about the flux patterns on the disk and much more.

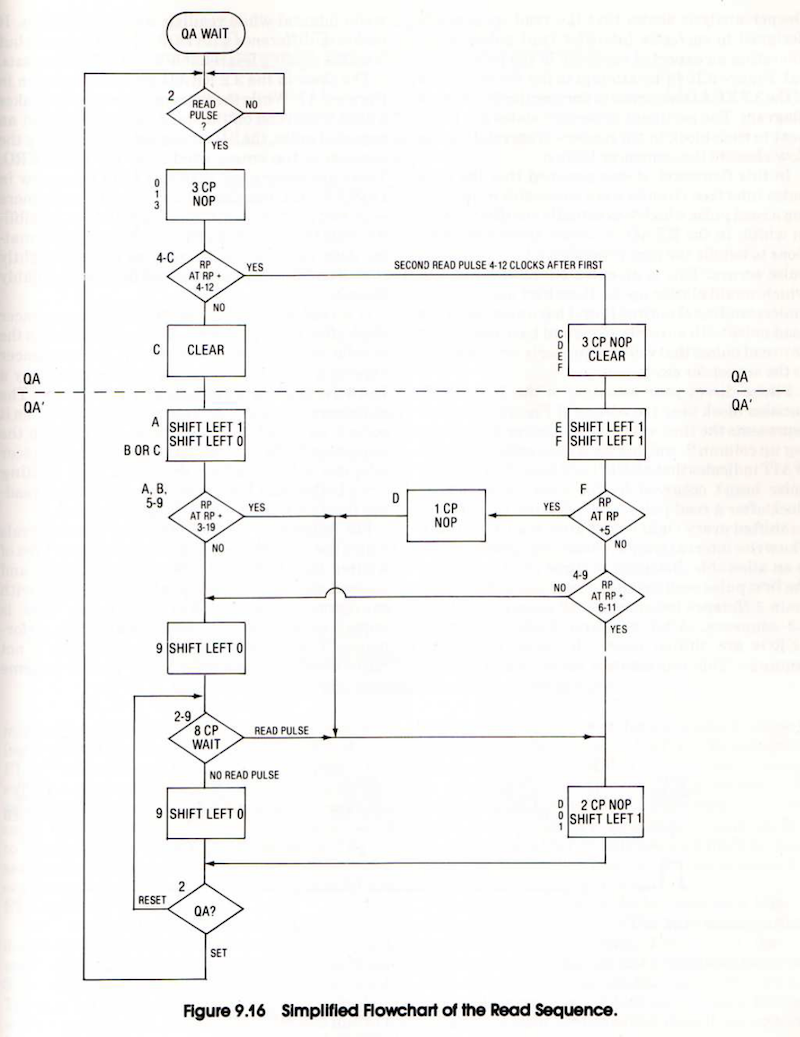

The design of the state machine is quite interesting, and I haven’t seen it described anywhere other than in Sather’s book. Anyone who remembers concepts like Mealy and Moore state machines from a long-ago class will recognize Woz’s work. The state machine ROM is just a simple lookup table. Its inputs are the current state, the read/write mode, the MSB of the shift register, and the next bit coming from the disk. The outputs are the next state and a set of control signals. Depending on the control signals, the state machine may shift the value in the shift register and append a zero or one bit, or clear the shift register, or parallel load the shift register from the data bus.

The state encodings are carefully chosen such that state bits can double as control bits. For example, when writing data to the disk, the value on the write head is also the most significant bit of the current state number. When reading data from the disk, the state sequences are chosen so as to frame pulses corresponding to 1 bits into an appropriate 4 microsecond window, and insert zero bits whenever 1.5 bit windows (6 microseconds) have elapsed without seeing a pulse. For my Yellowstone FPGA-based disk controller I’ve implemented the equivalent functionality in a hundred lines of Verilog; Woz did it with a 256-byte ROM and a hex flip-flop.
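The read-side timing can be approximated without modeling the ROM at all. This Python sketch is a deliberate simplification and not the actual state machine. It assumes each pulse is a 1 bit, that the first zero bit is inserted once 6 microseconds pass with no pulse, and that further zero bits follow every 4 microseconds after that. The function name and the sample timestamps are invented for illustration.

```python
def pulses_to_bits(pulse_times_us):
    """Convert pulse timestamps (in microseconds) into a bit sequence."""
    bits = [1]                                   # the first pulse is a 1 bit
    for previous, current in zip(pulse_times_us, pulse_times_us[1:]):
        gap = current - previous
        # Zero bits are inserted at 6 us, 10 us, 14 us, ... after the last pulse.
        zeros = max(0, int((gap - 2) // 4))
        bits.extend([0] * zeros + [1])
    return bits


# Pulses with slightly imperfect spacing, as a real drive might produce.
print(pulses_to_bits([0, 4, 12, 16.5, 28]))
```

The 2 microsecond margin on either side of each expected pulse time is what lets the scheme tolerate small variations in disk rotation speed.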

Putting It All Together

The words “software-driven” have come up again and again here, and that’s the theme of the Disk II controller. It enables a very flexible design using minimal hardware, but it pushes a tremendous amount of complexity onto the software. Modern software designers might call this bad practice, and would prefer to see that complexity abstracted away behind a standard interface, so the software only has to be concerned with actions like “read block 31”. And that’s exactly how all other Apple II disk controllers work. But in order to work with a Disk II controller, software like DOS 3.3, ProDOS, and most games need to include tons of extra code for manipulating the stepper, locating sectors, performing GCR decoding, and the whole kitchen sink. It’s a little bit crazy, but it works.

If you’ve read this far, you may be interested in the BMOW Floppy Emu disk emulator. Collectors of old Apple II, Macintosh, or Lisa computers will find the Floppy Emu invaluable for running software downloaded from the web, and transferring files between vintage and modern machines. The Floppy Emu stores hundreds of disk images on an SD memory card, and uses custom hardware to mimic many different kinds of Apple floppy disk drives and hard drives. Read more about it here.

During the 10+ years I’ve spent delving into every aspect of Apple’s disk drive designs, it’s been a remarkable journey. Years ago I used to think I understood how it all worked, but today I realize I hardly knew anything at all. Now I believe I’ve got everything mapped out, but of course there are probably still holes in my understanding that I’m blind to. Did I explain anything incorrectly here, or forget to mention something important? Let’s hear about it. Please leave a comment below.

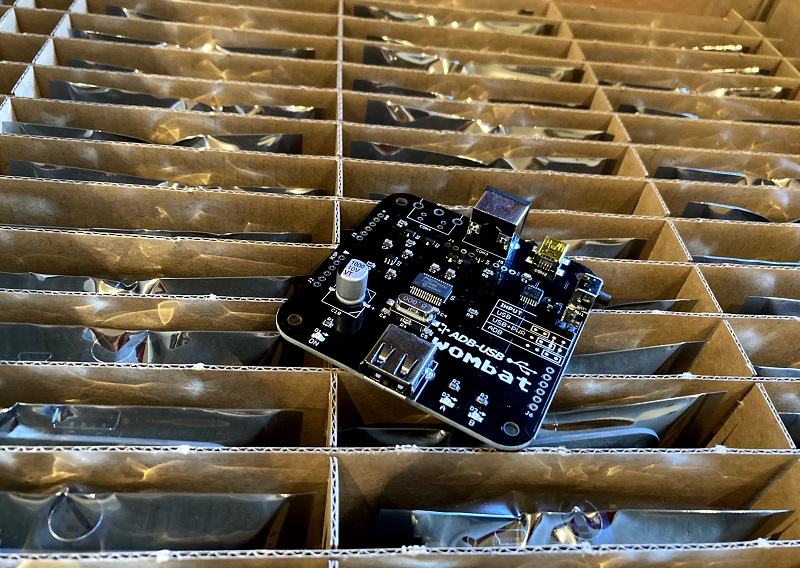

ADB-USB Wombat Restock

The Wombat ADB-USB input converter is now back in stock at the BMOW Store. Thanks for everybody’s patience during the manufacturing delay.

The Wombat is a bidirectional ADB-to-USB and USB-to-ADB converter for keyboards and mice.

- Connect modern USB keyboards and mice to a classic ADB-based Macintosh, Apple IIgs, or NeXT

- Connect legacy ADB input hardware to a USB-based computer running Windows, OSX, or Linux

No special software or drivers are needed – just plug it in and go.

The Wombat was developed by Steve Chamberlin here at Big Mess o’ Wires, and it’s great for breathing new life into your vintage Apple hardware collection.

For more details, please see the product description page.
