Archive for June, 2009

More on Memory

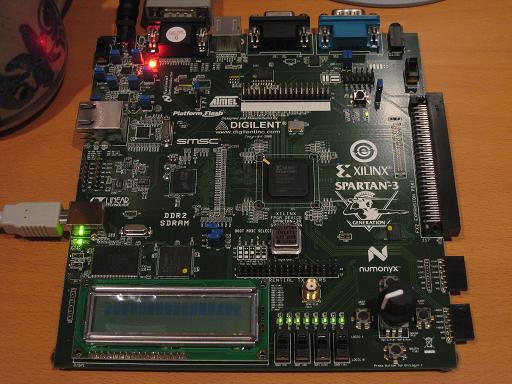

I’ve been working hard the past week on a DDR2 memory controller for the Xilinx starter kit, and refining my estimates for 3d Graphics Thingy’s memory bandwidth requirements. There’s been progress, but it feels like things are moving at a snail’s pace.

I wrote earlier about some basic bandwidth estimates, and have revised them somewhat here. Assume pixels and texels are 16 bits (5-6-5 RGB format), z-buffer entries are 24 bits, and the screen resolution is 640×480 @ 60Hz. Let’s also assume a simple case where there’s no alpha blending being performed, and every triangle has one texture applied to it, using point sampling for the texture lookup. For every pixel, every frame, the hardware must:

1. Clear the z-buffer, at the start of the frame: 3 bytes

2. Clear the frame buffer, at the start of the frame: 2 bytes

3. Read the z-buffer, when a new pixel is being drawn: 3 bytes

4. Write the z-buffer, if the Z test passes: 3 bytes

5. Read the texture data, if the Z test passes: 2 bytes

6. Write the frame buffer, if the Z test passes: 2 bytes

7. Read the frame buffer, when the display circuit paints the screen: 2 bytes

Assume too that the scene’s depth complexity is 4, meaning the average pixel is covered by 4 triangles, and steps 3-6 will be repeated 4 times. Add everything up, and that’s 47 bytes per pixel, times 640 x 480 is 14.43 MB per frame, times 60 Hz is 866.3 MB/s.
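The arithmetic above can be reproduced with a short script. This is only a sketch: the step table and the names (`STEPS`, `bytes_per_pixel`, `bandwidth_mb_per_s`) are made up for illustration, and a megabyte is taken as 10^6 bytes, which is what the 866.3 MB/s figure implies.

```python
# Bytes touched per pixel for each step, and whether the step repeats
# once per layer of depth complexity (steps 3-6) or once per frame.
STEPS = [
    ("clear z-buffer",     3, False),
    ("clear frame buffer", 2, False),
    ("read z-buffer",      3, True),
    ("write z-buffer",     3, True),
    ("read texture",       2, True),
    ("write frame buffer", 2, True),
    ("display read",       2, False),
]

WIDTH, HEIGHT, REFRESH_HZ = 640, 480, 60


def bytes_per_pixel(depth_complexity):
    """Sum the per-pixel memory traffic for one frame."""
    return sum(size * (depth_complexity if per_layer else 1)
               for _name, size, per_layer in STEPS)


def bandwidth_mb_per_s(depth_complexity):
    """Total traffic in MB/s, with 1 MB = 10**6 bytes."""
    per_frame = bytes_per_pixel(depth_complexity) * WIDTH * HEIGHT
    return per_frame * REFRESH_HZ / 1e6


if __name__ == "__main__":
    print(bytes_per_pixel(4))                 # 47
    print(round(bandwidth_mb_per_s(4), 1))    # 866.3
```

Changing the argument shows how strongly the total depends on depth complexity, since steps 3-6 account for 40 of the 47 bytes.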

The DDR2 memory on the Xilinx starter kit board has a theoretical maximum bandwidth of 1064 MB/s, so that might just fit. I have serious reservations about my ability to later recreate such a high-speed memory interface on a custom PCB, but ignore that for now. Unfortunately, you’ll never get anything close to the theoretical bandwidth in real-world usage, unless you’re streaming a huge chunk of data to consecutive memory addresses. Even half the theoretical bandwidth would be doing well. I’ll be conservative and assume I can reach 1/3 of the theoretical bandwidth, which means 355 MB/s. That’s not enough. And I’ll also need some bandwidth for vertex manipulations, since I’ve only considered pixel rasterization, and possibly for CPU operations too. It looks like things will definitely be bandwidth constrained.

Fortunately there are some clever tricks that can be used to save lots of memory bandwidth.

- Z occlusion: When a pixel fails the Z test at step 3, then steps 4-6 can be skipped. With a depth complexity of 4, and assuming randomly-ordered triangles, then on average 1 + 1/2 + 1/3 + 1/4 = 2.08 triangles will pass the Z test and get drawn, not 4. That’s a savings of 14 bytes per pixel, or 258 MB/s!

- Back-face culling: When drawing solid objects, it’s guaranteed that any triangle facing away from the camera will be overdrawn by some other triangle facing towards the camera. These back-face triangles can be ignored completely, skipping steps 3-6 and saving 10 bytes per culled pixel. Assuming half the pixels are part of back-facing triangles, then that’s a savings of 369 MB/s. Of course some of the pixels rejected due to back-face culling would also have been rejected by Z occlusion, so it’s not valid to simply add the savings from the two techniques.

- Z pre-pass: Another technique is to draw the entire scene while skipping steps 5 and 6, so only the Z buffer is updated. Then the scene is drawn again, but step 3 is changed to test for an exactly equal Z value, and step 4 is eliminated. This guarantees that steps 5 and 6 are only performed once per pixel, for the front-most triangle. However, step 3 must now be performed twice as many times, and all the vertex transformation and triangle setup work not accounted for here must be done twice. Whether this results in an appreciable overall savings depends on many factors.

- Skip frame buffer clear: If the rendered scene is indoors and covers the entire screen, then the frame buffer clear in step 2 can be omitted. That’s a savings of 37 MB/s.

- Skip Z-buffer clear: If the rendered scene covers the entire screen, then the Z-buffer clear in step 1 can also be omitted, but sacrificing one bit of Z-buffer accuracy. On even frames, the low half of the Z-buffer range can be used. On odd frames, the high half can be used, along with a reversal in the sense of direction, so larger values are treated as being closer to the camera. This means that every Z value from an even frame is farther away than any Z value from an odd frame, so each frame effectively clears the Z-buffer for the next one. This provides a savings of 55 MB/s.

- Texture compression: Compression formats like DXT1 can provide a 4:1 or better compression ratio for texture data. If the rasterizer can be structured so that an entire texture is read into a cache, and then used for calculations on many adjacent pixels, this can translate directly into a 4:1 bandwidth savings on step 5. Assuming less than perfect gains of 2:1, that translates to a savings of 18 MB/s.

- Texture cache: Neighboring pixels on the screen are likely to access the same texels, when the textures are drawn magnified. A texture that’s tiled many times across the face of a triangle may also result in many reads of the same texel. The expected savings depend on the particular model that’s rendered, but are probably similar to those for texture compression, or about 18 MB/s.

- Tiled Z-Buffer: The Z-buffer can be divided into many 8×8 squares, with a small amount of state data cached for each square: the farthest point (largest Z value) in the square, and a flag indicating if the square has been cleared. That’s 25 bits per square, or 15 KB for a 640×480 Z-buffer. That should fit in the FPGA’s block RAM. Then when considering a pixel before step 3, if the pixel’s Z value is larger than the cached Z-max for that square, the pixel can be rejected without actually doing the Z-buffer read. Furthermore, when the Z-buffer needs to be cleared, the cleared flag for the block can be set without actually clearing the Z-buffer values. Then the next time that Z-buffer square is read, if the cleared flag is set, the hardware can return a square filled with Z-far without actually reading the Z-buffer values. This skips both a Z write and a Z read for the entire square. In order to gain the benefit of the cleared flag, the hardware must operate on entire 8×8 blocks at once before writing the result back to the Z-buffer. The total savings for both these techniques is at least 110 MB/s, and possibly as much as 165 MB/s depending on how much is occluded with the square-level Z test.

- Z-buffer compression: 8×8 blocks of Z-buffer data can be stored compressed in memory, using some kind of differential encoding scheme. Like the previous technique, this would require the hardware to operate on an entire 8×8 block at a time in order to see any benefit. The cost of all Z-buffer reads and writes might be reduced by 2:1 to 4:1, at the cost of additional latency and hardware complexity to handle the compression. This could provide a savings in the range of 350 MB/s.
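Several of the per-technique figures in the list above follow from the 47-byte baseline with a little arithmetic. The sketch below is illustrative only: the function names are invented here, a megabyte is taken as 10^6 bytes, and the Z-occlusion expectation is found by brute force, enumerating every possible drawing order of the triangles that overlap a pixel.

```python
from fractions import Fraction
from itertools import permutations

PIXELS_PER_SECOND = 640 * 480 * 60   # pixels touched per second at 60 Hz


def mb_per_s(bytes_per_pixel):
    """Convert a per-pixel byte count into MB/s (1 MB = 10**6 bytes)."""
    return bytes_per_pixel * PIXELS_PER_SECOND / 1e6


def expected_z_passes(depth_complexity):
    """Average number of triangles that pass the Z test at one pixel,
    taken over every possible drawing order of the overlapping triangles.
    A triangle passes when it is closer than everything drawn before it."""
    total = 0
    count = 0
    for order in permutations(range(depth_complexity)):
        nearest = None
        for depth in order:               # smaller value = closer
            if nearest is None or depth < nearest:
                nearest = depth
                total += 1
        count += 1
    return Fraction(total, count)


def tile_state_bytes(width=640, height=480, tile=8, bits_per_tile=25):
    """On-chip storage for the tiled Z-buffer: 24-bit Z-max plus 1 flag."""
    tiles = (width // tile) * (height // tile)
    return tiles * bits_per_tile // 8


if __name__ == "__main__":
    passes = expected_z_passes(4)
    print(passes, float(passes))          # 25/12, about 2.08
    # Steps 4-6 cost 3 + 2 + 2 = 7 bytes; about 2 of the 4 layers skip them.
    print(round(mb_per_s(2 * 7)))         # Z occlusion: 258
    # Half of the 4 layers skip steps 3-6 (10 bytes each).
    print(round(mb_per_s(2 * 10)))        # back-face culling: 369
    print(round(mb_per_s(2)))             # skip frame buffer clear: 37
    print(round(mb_per_s(3)))             # skip Z-buffer clear: 55
    print(tile_state_bytes())             # 15000
```

The enumeration gives exactly 25/12, the same value as 1 + 1/2 + 1/3 + 1/4. The 14-byte Z-occlusion figure corresponds to rounding the skipped layers to 2; using 4 - 25/12 instead would give a slightly smaller saving.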

Unfortunately the savings from all these techniques can’t merely be summed, and the savings I’ve estimated for each one are assuming it’s done by itself, without any of the other techniques. However, when used together, the combination of backface culling plus Z-occlusion should provide at least 400 MB/s in savings, texture compression and caching another 30 MB/s, and Z-buffer tiling another 110 MB/s. That lowers the total bandwidth needs down to 326 MB/s, roughly the same as my conservative estimate of real-world available bandwidth.
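As a quick budget check, the combined estimate can be laid out as a few lines of arithmetic. The numbers are the ones quoted in this post; the variable names are invented for the sketch.

```python
BASELINE_MB_S = 866.3            # unoptimized estimate from above
THEORETICAL_MB_S = 1064          # DDR2 peak on the starter kit board

# Combined savings quoted in the text, in MB/s.
savings = {
    "back-face culling + Z occlusion": 400,
    "texture compression + caching": 30,
    "Z-buffer tiling": 110,
}

required = BASELINE_MB_S - sum(savings.values())
available = THEORETICAL_MB_S / 3  # conservative one-third of peak

if __name__ == "__main__":
    print(round(required))        # 326
    print(round(available))       # 355
    print(required <= available)  # True
```

The margin is under 30 MB/s, so it would not take much vertex or CPU traffic to use it up.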

FPGA Pong

Everyone working on a video application using an FPGA seems to start with Pong, so why should I be any different? I put together this Pong demo as an exercise to help get more familiar with Verilog, and gain some experience working with the Xilinx tools and the Spartan 3A FPGA starter kit. It was very slow going at first, but things are slowly beginning to make sense to me now. And hey, I’ve got Pong!!

- pong.v – Verilog HDL source

- pong.ucf – user constraints file, with pin mappings for the Spartan 3A starter kit

Some of the ideas were taken from fpga4fun’s Pong tutorial, and the quadrature decoding logic for the rotary knob was ripped from the tutorial verbatim.

I found that the most difficult part to get working was the collision detection. My brain kept getting tripped up by the difference between writing software where statements are executed sequentially, and HDL statements defining a bunch of operations that all happen at once. I ended up with something like this:

    always @(posedge clk) begin
        if (collided)
            direction <= !direction;
        if (direction)
            position <= position + 1;
        else
            position <= position - 1;
    end

That looks fine for sequential code, but in hardware it didn’t work. When the ball reached a point where a collision was detected, the hardware would switch the direction and increment the position simultaneously. The position increment used the old direction value, since it was happening in parallel. The result was that the ball would move one step deeper into collision territory. On the next clock, it would move in the new direction, but since it had been two steps into collision territory, one step wasn’t enough to get out, so another collision was detected and it reversed direction yet again. This caused the ball to get stuck in the wall. Confused? Me too.

I ended up solving this by introducing an XOR:

    always @(posedge clk) begin
        if (collided)
            direction <= !direction;
        if (direction ^ collided)
            position <= position + 1;
        else
            position <= position - 1;
    end

I think there must be some more elegant solution, but I didn’t find it.
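The stuck-in-the-wall behavior and the XOR fix can both be reproduced in a small software model. This is a sketch under assumptions: the wall positions, the one-dimensional ball, and the definition of `collided` as "at or beyond a wall" are all hypothetical stand-ins for the real design. The key detail is that every right-hand side reads the old register values, as non-blocking assignments do.

```python
LEFT_WALL, RIGHT_WALL = 0, 10    # hypothetical wall positions


def step(position, direction, use_xor_fix):
    """One clock edge. Both right-hand sides read the OLD register values,
    the way non-blocking assignments (<=) behave in the Verilog above."""
    collided = position <= LEFT_WALL or position >= RIGHT_WALL
    move_right = direction ^ collided if use_xor_fix else direction
    next_direction = (not direction) if collided else direction
    next_position = position + 1 if move_right else position - 1
    return next_position, next_direction


def run(use_xor_fix, clocks=8, position=8, direction=True):
    """Return the ball position after each clock (True = moving right)."""
    trace = []
    for _ in range(clocks):
        position, direction = step(position, direction, use_xor_fix)
        trace.append(position)
    return trace


if __name__ == "__main__":
    print(run(use_xor_fix=False))  # [9, 10, 11, 10, 11, 10, 11, 10]
    print(run(use_xor_fix=True))   # [9, 10, 9, 8, 7, 6, 5, 4]
```

Without the XOR, the ball bounces between 10 and 11 forever, because each reversal arrives one clock too late to get it out of the wall. With the XOR, the position update effectively sees the new direction on the same clock as the collision.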

I also had trouble handling the initial conditions, setting the ball position and direction to appropriate values at startup. Normally I’d do this with a reset line, but I’m not sure how to do it in an FPGA. The Spartan 3A starter kit doesn’t provide any kind of reset input that I could find in the documentation. And at any rate, it would need to reset the logic of the design, without resetting the FPGA itself, which clears the design entirely until it’s programmed again.

I later discovered from James Newman that “initial” blocks are actually synthesizable, so you can do something like:

    initial begin
        position <= 200;
    end

That blew my mind, because I must have read 100 different Verilog guides that say initial blocks can’t be synthesized into hardware. And yet, it’s true. I’m very curious how this actually works.

I want to review the Pong design, because it feels way more complicated than it needs to be. I described this to James as Verilog making me lazy. It seems way too easy to write some complicated expression involving a dozen equality comparisons, greater/less than comparisons, and ANDs and ORs, when with a little more thought, the logic could probably be substantially simplified. For instance, I think

    wire ball = (xpos >= ballX && xpos <= ballX+7);

could be rewritten as

    wire [9:0] delta = xpos - ballX;
    wire ball = (delta[9:3] == 0);
    // or even wire ball = ~(|delta[9:3])

which only requires a 10-bit subtractor and a 7-input NOR, instead of two 10-bit comparators and a 2-input AND. Or maybe:

    reg [2:0] ballCount;
    always @(posedge clk) begin
        if (xpos == ballX)
            ballCount <= 7;
        else if (ballCount != 0)
            ballCount <= ballCount - 1;
    end
    wire ball;
    assign ball = ballCount != 0;

That’s a 3-bit down counter, and a bunch of XORs and NORs. I’m not sure if that’s better, but you get the idea. Once you start writing complex clauses of nested ifs and lengthy boolean expressions, it’s easy to lose sight of the underlying hardware implementation.
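One way to gain confidence in rewrites like these is to check them exhaustively in software before touching the hardware. The sketch below is a model, not the real design: it assumes 10-bit `xpos` and `ballX`, assumes `ballX+7` in the original comparison does not wrap, and assumes `xpos` advances by one pixel per clock for the counter version. The Python function names are invented for the example.

```python
MASK10 = 0x3FF  # xpos, ballX and delta are 10-bit values


def ball_compare(xpos, ball_x):
    """Original test: two comparators and an AND."""
    return ball_x <= xpos <= ball_x + 7


def ball_subtract(xpos, ball_x):
    """Rewrite: 10-bit subtract, then check that bits 9..3 are all zero."""
    delta = (xpos - ball_x) & MASK10
    return (delta >> 3) == 0


def ball_counter_row(ball_x, width=64):
    """Scan one row left to right, one pixel per clock, with the 3-bit
    down counter. Returns the xpos values where `ball` is asserted."""
    ball_count = 0
    lit = []
    for xpos in range(width):
        if ball_count != 0:          # combinational output, before the edge
            lit.append(xpos)
        if xpos == ball_x:           # clock edge: update the register
            ball_count = 7
        elif ball_count != 0:
            ball_count -= 1
    return lit


if __name__ == "__main__":
    mismatches = [(x, b) for b in range(1024) for x in range(1024)
                  if ball_compare(x, b) != ball_subtract(x, b)]
    # Every disagreement involves the subtractor wrapping past 1023.
    print(all(b > 1016 for _x, b in mismatches))  # True
    print(ball_counter_row(20))   # [21, 22, 23, 24, 25, 26, 27]
```

In this model the subtractor version matches the comparators whenever `ballX` is 1016 or less, which should cover a 640-pixel-wide screen. The counter version is not quite a drop-in replacement: it asserts `ball` for 7 pixels starting one pixel after `ballX`, so the load value or the compare point would need adjusting to get the same 8-pixel span.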

Memory Bandwidth

I did some preliminary memory bandwidth calculations for 3D Graphics Thingy, based upon the discussion in the comments of the previous post, and the numbers aren’t encouraging. Even for the simplest possible case, I don’t think there will be enough bandwidth to do what I’m imagining, let alone any more complex cases involving more interesting rendering effects.

For every pixel, every frame, this is the minimum that must be done:

1. Clear the z-buffer, at the start of the frame

2. Clear the frame buffer, at the start of the frame

3. Read the z-buffer, when a new pixel is being drawn

4. Write the z-buffer, if the Z test passes

5. Write the frame buffer, if the Z test passes

6. Read the frame buffer, when the display circuit paints the screen

That’s 6 memory operations, per pixel, per frame. Assuming a pixel is 3 bytes (one byte each for red, green, and blue), a z-buffer entry is also 3 bytes, the frame buffer is 640 x 480, and the refresh rate is 60 Hz, then that’s:

6 * 3 * 640 * 480 * 60 = 331,776,000 bytes/sec, or about 332 MB/sec (316 MB/sec if a megabyte is counted as 2^20 bytes)

If the DRAM datapath is 16 bits wide, as is common, then that’s about 166 million memory transactions per second, so the DRAM must run at about 166 MHz. This is maybe within the realm of possibility, but not by much, I think.
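The same calculation as a short script, for anyone who wants to plug in different numbers. It is a sketch with invented variable names; it prints the byte count under both megabyte conventions, and derives the 16-bit transfer count from the exact byte total.

```python
OPS_PER_PIXEL = 6
BYTES_PER_OP = 3
WIDTH, HEIGHT, REFRESH_HZ = 640, 480, 60
BUS_BYTES = 2   # 16-bit DRAM datapath

bytes_per_second = OPS_PER_PIXEL * BYTES_PER_OP * WIDTH * HEIGHT * REFRESH_HZ
transfers_per_second = bytes_per_second // BUS_BYTES

if __name__ == "__main__":
    print(bytes_per_second)                      # 331776000
    print(round(bytes_per_second / 2**20, 1))    # 316.4 (1 MB = 2**20 bytes)
    print(round(bytes_per_second / 1e6, 1))      # 331.8 (1 MB = 10**6 bytes)
    print(round(transfers_per_second / 1e6, 1))  # 165.9 million per second
```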

A more realistic estimate would involve steps 3, 4, and 5 happening many times, as fragments of different triangles overlap the same pixel. Performing alpha blending would add an additional “read the frame buffer” step for each pixel. And drawing textured triangles rather than flat-shaded ones would involve one or more additional texture memory reads for each pixel. A more realistic memory bandwidth estimate for a scene with an average depth complexity of 4, with alpha blending and texturing, is probably about 1.4 GB/second, requiring a memory speed of 700MHz. That’s definitely out of reach.

There are certainly some tricks I could use to improve things, starting with using several DRAMs in parallel. Unfortunately each new DRAM requires about 40 FPGA pins to interface with it, and since I need to limit myself to low pin-count FPGAs that can be hand-soldered, realistically I probably can’t do more than two DRAMs in parallel. Using a smaller frame buffer or fewer bits per pixel would also help, but that’s trading away image quality, which I’d like to avoid.

Caching seems like it should play a role here, but I’m not sure exactly how. If there are many shader units operating in parallel, they all must keep their caches in sync somehow, or share a single cache. And even if there’s only one shader unit, so cache coherency isn’t an issue, it’s not obvious to me that a traditional cache would actually speed things up. A pixel shader won’t spend lots of time manipulating the same few bytes of memory over and over, the way a CPU does when executing a small loop. Instead, it traverses the interior of a triangle, visiting each pixel exactly once. The next triangle it processes is unlikely to have any overlap with the previous one. Given these patterns, a cache probably won’t help.

FPGAngst

I’ve spent the past few days getting familiar with my Xilinx Spartan 3A Starter Kit, and so far, it’s not going well. I’d thought I was pretty competent with the basics of digital electronics, and the concepts of HDL programming and FPGAs. But working through a “blink the LED” example using the Xilinx ISE WebPack software has been an exercise in frustration. The learning curve is more like a brick wall, and I’m getting dizzy from banging my head into it over and over.

I’ll begin with the starter kit itself. Given the name, you might think it’s aimed at people who want to get started with FPGAs. Forget it. I was very disappointed to find that the starter kit came with almost no documentation at all. Instead, it just had a DVD with a two-year-old version of the ISE software (now two major releases out of date), and a leaflet with a URL to find more information. The only printed documentation was for the Embedded Development Kit, which is a separate product and doesn’t even work with the free version of the Xilinx ISE. Following the URL, I found the manual for the starter kit, but it’s little more than a catalog of all the hardware on the board. If you want any kind of tutorial for an FPGA “hello world” using this board, or a high-level overview of the various steps involved in creating and programming an FPGA design, or any kind of “starter” information at all, you’ll have to look elsewhere.

Plowing through the ISE software on my own, the first issue I faced was the need to choose what FPGA model I wanted to target. You might think there would be a predefined choice for “Spartan 3A Starter Kit”, but you’d be wrong. After some digging, I found that the starter kit has a XC3S700A, but that wasn’t enough. I needed to specify what package it was, and what speed grade too. How do you tell this? It’s mentioned nowhere in the starter kit manual. After about 20 minutes of searching around, I finally managed to find the web page that deciphered the tiny, near-illegible numbers printed on the chip to determine the package and speed. It’s FG484-4, if you’re keeping score at home.

The ISE itself is really bewildering. It’s basically a shell application that coordinates half a dozen other tools, each of which has its own UI and terminology. The other tools look like old command-line apps that someone slapped together a GUI for using Tcl/Tk. The ISE uses a strange (to me at least) “process” metaphor, which is a context-sensitive subpanel that fills with different actions, depending on what you’ve selected in the main GUI. It took me two days of hunting to figure out what I needed to click on to make the simulation-related process options magically appear. The processes are also arranged in a hierarchical list, so in most cases, running a process requires running all the ones in the tree before it. I still haven’t figured out how to check if my Verilog compiles without doing a complete synthesize, place, and route for the entire design.

Other ISE headaches:

- The GUI-based PlanAhead tool used to assign signals to physical pins bears no relation to the text-based UCF (user constraints) file examples in the starter kit online manual.

- ISE keeps getting confused about the UCF file, and I have to remove it from the project and re-add it. It’ll complain that I don’t have a UCF file, then when I try to add one, it complains there already is one.

- Integration with iMPACT (the programming tool) is apparently broken. ISE says it’s launching it, but doesn’t. iMPACT must be launched manually.

- After using a wizard to create a DCM module to divide the input clock by two, there’s no “results” page or other info that defines what ports the wizard-created module has. It doesn’t let you actually look at the module code: clicking on it just relaunches the wizard. I had to go poke through random files on disk to discover the module ports.

In comparison to the software, the hardware itself seems pretty good, but maybe a little TOO good. There are no less than four different configuration EEPROMs that can be programmed, with a complicated system of jumpers for controlling which one to program and which to use at startup. This just makes life more complicated than it needs to be.

The only big negative about the hardware is that there’s no SRAM at all. I don’t know how I missed this when I was looking at the specs. Instead, it has 64MB of DDR2 SDRAM. Yeah, that’s a lot of RAM, but creating a memory controller interface for DDR2 RAM is a big honking complicated task all in itself. That means that if you want to do any kind of project involving RAM, you either need to be content with the few kilobytes of block RAM in the FPGA itself, or go on a long painful detour to design a DDR2 memory controller first. The 133MHz oscillator for the DDR2 RAM also occupies the only free clock header, so it’s impossible to introduce another clock to the design (for example, a 25.175MHz oscillator for generating VGA video).

Stumbling blindly through the software, I did finally manage to design, simulate, program, and run a simple example that blinked three LEDs. I’m sure everything will make more sense in time, but it’s hard for me not to feel grumpy right now. I feel like I’m spending all my energy wrestling with the tool software, and none on the project itself. In short, it feels like a software project, not a hardware one. I’ve barely touched the board, other than to plug in the USB cable and flip the power switch. My multimeter, chip puller, wire stripper, and other tools sit unused in my toolbox. Instead, I’m spending time reading a lot of manuals and guessing at what some opaque piece of software is actually doing under the hood. The experience with the clock generation wizard was downright depressing: it just writes some HDL code for you and doesn’t even let you see it, so you’re at least two levels removed from having a prayer of actually understanding what’s going on. Since my end goal in all my homebrew hardware is to gain a better understanding of how things work, that’s especially galling.

I’m going to search out some more ISE tutorials and any other good learning tools I can find, but I’m also going to take another look at the Altera tools. I’ve heard that the Altera software is more beginner-friendly, but I went with Xilinx because their starter kit appeared more powerful. I’m now realizing that the quality of the software tools and ease of the development experience is much more important than the number of gates on a particular FPGA. Altera’s Cyclone II starter kit isn’t as full-featured as the Xilinx kit I have now, but it’s decent, and it has some SRAM too. More than likely, the Altera tools will just be a different flavor of incomprehensibility, but it’s worth a look.

Goodbye BMOW, and a Contest

It’s a bittersweet day today. After 18 months of development, occupying the top of my desk and the majority of my spare time, BMOW 1 has been officially retired to the closet. I packed up the case, packed up the power supply, keyboard, cables, EPROM programmer, and everything else. At least it had a good send-off party at the Maker Faire. Now I can actually see the surface of my desk for the first time since 2007, and I’m ready to turn my full attention to my next project.

To mark the occasion, I’m running a small contest, with ten fabulous BMOW stickers as the prize. If you’ve followed my progress for a while, then you know that the “C” key doesn’t work in BMOW BASIC, due to a bug related to control-C handling that I never bothered to fix. The contest is simple: write a BMOW BASIC program that prints the letter C to the screen, without typing “C” as part of the program. This isn’t as easy as it might seem, since many of the relevant BASIC keywords also have the letter C in their name.

The first person to reply with a working solution, as judged by me, will receive the stickers by mail, and the honorary title of “BMOW Guru”. It’s not quite like being knighted, but it’s close.

Some hints:

- BMOW BASIC is a straight port of Microsoft BASIC, but does not include any machine specific commands for file I/O, graphics, etc. Do a Google search to learn more about MS BASIC keywords.

- The video memory is not mapped anywhere into the BASIC address space, so you can’t just POKE a byte into screen memory.

- Try this JavaScript Applesoft BASIC interpreter, which is also a Microsoft BASIC variant. But remember, BMOW BASIC isn’t 100% identical to Applesoft. Try the BMOW simulator on the Downloads page if you’re unsure.

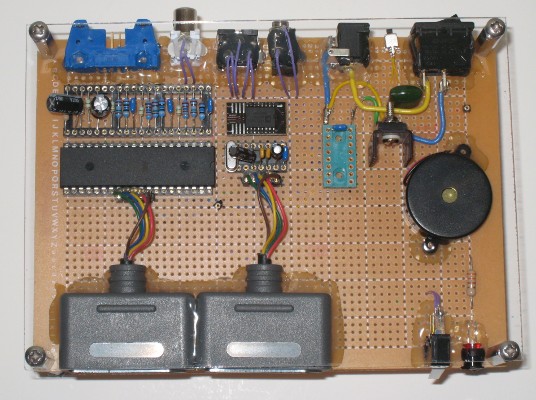

Uzebox, Take 2

It’s done. Yes, it cost twice as much money and 100X as much time as just buying one from Adafruit or Sparkfun, but I’ve finished my home-made Uzebox.

Notable features vs. the “stock” Uzebox:

- vertical mount

- mini-stereo jack for audio

- internal speaker

- transparent acrylic case

- lots of glue

And it plays Arkanoid! Now I can return to my regularly scheduled life. 🙂

I wrote about the Uzebox earlier: it’s an open-source hardware project utilizing a microcontroller to synthesize an NTSC video signal on the fly, in software. Many classic games have been ported to it, and there’s an active developer community.
