Archive for July, 2008

Video System Design

The BMOW video system design is done! Save for that one question about the correct current source setup that I mentioned previously, everything is nailed down to the last detail. I tried to cram in the maximum amount of flexibility that I possibly could, without making the hardware requirements too crazy. All told, I think I spent about a month working on the design. Now I just need to build the darn thing, and pray that it actually works!

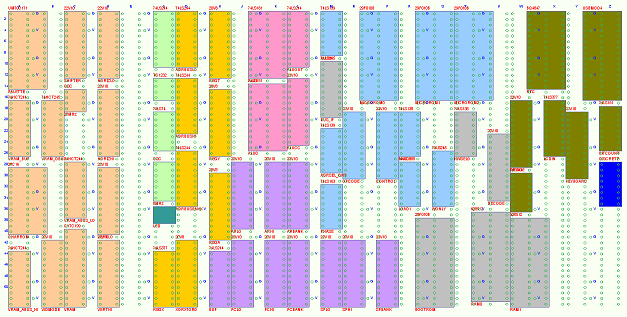

The finished design uses 14 ICs and a handful of other components, making it BMOW’s biggest subsystem. Keeping the required board area small was an important goal, since I’ve nearly filled the BMOW system board, but I’d like to leave some free space for an eventual audio subsystem. As it turned out, I used about 80% of the remaining free space for the video components, which leaves a small but non-zero area for audio. The video system components are drawn in tan, at the left side of the board layout diagram below. Click the image to see a bigger version.

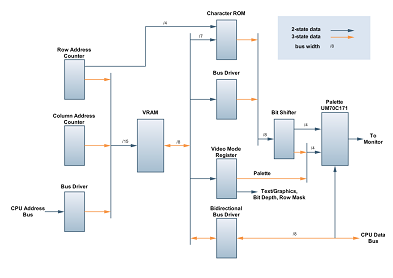

The video system supports display resolutions up to 512×480, with up to 256 colors possible at lower resolutions. A 64-column text mode is also provided, with a hardware cursor and 8×16 font glyphs stored in a character ROM. Bitmapped graphics support a variety of resolutions and color depths. Many video settings can be changed on a line-by-line basis, allowing text and graphics using different palettes and color depths to be mixed within a single screen. The following block diagram shows the major functional units. Click on the image to see a larger version.

Overview

All video data is stored in a 32K static RAM. During normal operation, 4 GALs serve as row and column counters, generating the required VRAM addresses as well as sync, blank, and other timing signals. When the CPU wishes to read or write to VRAM, the row and column counter outputs are disabled, and the CPU address is driven onto the VRAM address bus. Because there’s no explicit synchronization between the CPU and the video circuitry, the CPU may access VRAM while the video circuitry is drawing the visible portion of a line to the screen. If that happens, video noise or “snow” will appear briefly on the screen. While this video snow is undesirable, I’m hopeful that it will be tolerable in practice. The snow can also be avoided entirely, by constraining the CPU access through software so that it only occurs during the vertical blank period, when nothing is being drawn to the screen.

In graphics modes, the VRAM data is passed directly to the bit shifter without translation. Text mode operates differently, however. Text VRAM data is used as the address to the character ROM, along with the lowest 4 bits of the row count, to select the right portion of the font glyph to display. The selected portion of the font glyph is then passed to the bit shifter. Seven bits of VRAM data are used to select the character, providing for 128 different characters. The eighth bit is passed to the bit shifter as an “inverse” flag, so any character can be made to appear inverted by changing a single bit in VRAM. This can be used to indicate the cursor position, or to highlight a region of text.
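As a rough illustration, the text-mode lookup described above could be modeled in Python like this. The function names, the choice of bit 7 as the inverse flag, and the ROM layout (16 consecutive bytes per character, with the glyph row in the low four address bits) are assumptions made for the sketch, not details taken from the schematics.

```python
def char_rom_lookup(vram_byte, row_count):
    """Return (character ROM address, inverse flag) for one text cell."""
    char_code = vram_byte & 0x7F        # seven bits select 1 of 128 characters
    inverse = bool(vram_byte & 0x80)    # assumed: the top bit is the inverse flag
    glyph_row = row_count & 0x0F        # lowest 4 bits of the row count, 16 rows per glyph
    # Assumed layout: character code in the upper address bits, glyph row below it.
    address = (char_code << 4) | glyph_row
    return address, inverse


def apply_inverse(glyph_bits, inverse):
    """Flip all eight pixels of a glyph row when the inverse flag is set."""
    return glyph_bits ^ 0xFF if inverse else glyph_bits


if __name__ == "__main__":
    address, inverse = char_rom_lookup(0xC1, 19)
    print(hex(address), inverse)
```

With this layout, 128 characters of 16 rows each would occupy 2048 bytes of ROM.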

In some video modes, the first byte of row data defines mode settings for that line on the screen. The character ROM can be turned on and off, palette selections can be changed, and color depth can be modified, all within different portions of the same screen. For example, a game might set up a screen that’s predominantly in 16 color mode. But it might set one palette of 16 colors for the upper half of the screen, another palette of 16 colors for the lower half, a small area of 256 colors along the top border for a status display, and a row of text along the bottom border for the score. For those modes that use it, the mode settings are latched directly from VRAM into the VIDMODE register at the beginning of each line. For the other modes, VIDMODE is latched once per frame, during the non-visible portion of the frame.

Once data reaches the bit shifter, it’s shifted out at a rate dependent on the current color depth, and passed on to the palette chip. For 2, 4, and 16 color modes, the bit shifter provides four bits of the palette data, and the VIDMODE register provides the other four. In effect, using VIDMODE allows for an additional four bits of data per pixel, but those four bits are shared by every pixel on the same line. In 256 color mode, the bit shifter provides all eight bits of the palette data, and the VIDMODE output is disabled.
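The way the palette index is assembled can be sketched as follows. Which half of the index comes from VIDMODE is not stated above, so the split used here (VIDMODE as the upper four bits) is an assumption, as is the function name.

```python
def palette_index(shifter_bits, vidmode_bits, colors):
    """Combine bit shifter output and VIDMODE bits into an 8-bit palette index."""
    if colors == 256:
        # The bit shifter supplies all eight bits; the VIDMODE output is disabled.
        return shifter_bits & 0xFF
    if colors not in (2, 4, 16):
        raise ValueError("colors must be 2, 4, 16, or 256")
    # Assumed split: VIDMODE supplies the upper nibble, the shifter the lower one.
    return ((vidmode_bits & 0x0F) << 4) | (shifter_bits & 0x0F)


if __name__ == "__main__":
    print(hex(palette_index(0x5, 0xA, 16)))
```

Under that assumption, the VIDMODE bits act like a per-line selector for one of 16 banks of 16 palette entries.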

The video circuitry uses the UM70C171 palette chip that I’ve mentioned several times before. It combines a 256-entry palette RAM and three D-to-A converters into a single chip. A similar result could be achieved by using a standard SRAM for the palette and three separate DACs, but at the cost of increased complexity and required chip count.

Video Modes

Four bits of control data govern the basic video resolution settings: two for the vertical resolution, and two for the horizontal. Decreasing the horizontal resolution increases the color depth, so that the number of data bytes per line remains constant, with some exceptions. Some modes use the first byte or two of row data for mode settings, with a consequent reduction in horizontal resolution. The character ROM may be turned on in any mode, but will only produce recognizable characters in 2 color mode. In the other modes, the font glyphs will effectively become small colored bitmaps, which might permit some interesting effects. The 16 primary video modes are:

| Mode | Resolution and colors | Notes |
|---|---|---|
| 0 | 504×200 @ 2 colors | mode byte per line, 63×25 text with character ROM |
| 1 | 504×200 @ 4 colors | mode byte per line |
| 2 | 252×200 @ 16 colors | mode byte per line |
| 3 | 126×200 @ 256 colors | mode byte per line |
| 4 | 512×240 @ 2 colors | 64×30 text with character ROM |
| 5 | 512×240 @ 4 colors | |
| 6 | 256×240 @ 16 colors | |
| 7 | 128×240 @ 256 colors | |
| 8 | 504×400 @ 2 colors | mode byte per line |
| 9 | 252×400 @ 4 colors | mode byte per line |
| 10 | 126×400 @ 16 colors | mode byte per line |
| 11 | 63×400 @ 256 colors | mode byte per line |
| 12 | 512×480 @ 2 colors | |
| 13 | 256×480 @ 4 colors | |
| 14 | 128×480 @ 16 colors | |
| 15 | 64×480 @ 256 colors | |

It looks like a pretty random assortment of resolutions and colors, but they all fall directly from the use of the resolution control bits. A few notes:

- Check out mode 12, at 512×480! That’s a lot of resolution.

- Mode 7 is probably best for displaying digital photos or smooth color gradients.

- Mode 2 at 252×200 and mode 8 at 504×400 have the pixels that are closest to being square.

- The 200 and 400 line modes are handy for adjusting the pixel aspect ratio. Otherwise the higher resolutions of the 240 and 480 line modes are probably more useful.
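The arithmetic behind the mode table and the notes above can be checked with a short script. The mode data is copied from the table; the helper names are made up for the sketch, and the pixel aspect ratio calculation assumes that every mode's visible image fills a 4:3 display exactly, which may not match the real timing.

```python
# (mode, width, height, colors, has_mode_byte) copied from the mode table.
MODES = [
    (0, 504, 200, 2, True), (1, 504, 200, 4, True),
    (2, 252, 200, 16, True), (3, 126, 200, 256, True),
    (4, 512, 240, 2, False), (5, 512, 240, 4, False),
    (6, 256, 240, 16, False), (7, 128, 240, 256, False),
    (8, 504, 400, 2, True), (9, 252, 400, 4, True),
    (10, 126, 400, 16, True), (11, 63, 400, 256, True),
    (12, 512, 480, 2, False), (13, 256, 480, 4, False),
    (14, 128, 480, 16, False), (15, 64, 480, 256, False),
]

BITS_PER_PIXEL = {2: 1, 4: 2, 16: 4, 256: 8}


def pixel_bytes_per_line(width, colors):
    """Bytes of pixel data per line, not counting any mode bytes."""
    return width * BITS_PER_PIXEL[colors] // 8


def pixel_aspect(width, height, display_aspect=4 / 3):
    """Width-to-height ratio of one pixel; 1.0 is square.

    Assumes the visible image fills a 4:3 display exactly.
    """
    return display_aspect * height / width


def squarest_modes():
    """Mode numbers whose pixel aspect ratio is closest to 1.0."""
    errors = {m: abs(pixel_aspect(w, h) - 1.0) for m, w, h, _, _ in MODES}
    best = min(errors.values())
    return sorted(m for m, e in errors.items() if abs(e - best) < 1e-9)


if __name__ == "__main__":
    for mode, w, h, colors, _ in MODES:
        n = pixel_bytes_per_line(w, colors)
        print(f"mode {mode:2}: {n:3} bytes/line, {n * h:5} bytes/frame, "
              f"pixel aspect {pixel_aspect(w, h):.2f}")
    print("squarest modes:", squarest_modes())
```

Every mode works out to either 63 or 64 pixel bytes per line, or 126 or 128, and the largest frames come to 30720 bytes, which fits in the 32K VRAM. Under the 4:3 assumption, modes 2 and 8 come out closest to square.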

For applications where there’s lots of animation happening, significant amounts of VRAM data may need to be modified by the CPU each frame. If this becomes prohibitive, a bit in the VIDMODE register can be set to enable line-doubling mode. In this mode, the least significant bit of the row count is masked out, so that only data for the even rows is read from VRAM, and the same line is repeated for odd rows. This cuts in half the amount of data that must be modified by the CPU, and enables reducing the vertical resolution to as low as 100.
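The row masking used by line-doubling mode amounts to a single bitwise operation. Here is a minimal sketch, with hypothetical function names, that also counts how many distinct VRAM rows the CPU would have to fill per frame.

```python
def vram_row(row_count, line_doubling):
    """Row used for VRAM addressing, given the raw row counter value."""
    if line_doubling:
        return row_count & ~1   # mask out the least significant bit
    return row_count


def rows_to_update(height, line_doubling):
    """Number of distinct VRAM rows read while drawing one frame."""
    return len({vram_row(r, line_doubling) for r in range(height)})


if __name__ == "__main__":
    print(rows_to_update(200, False), rows_to_update(200, True))
```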

For the curious, I’ve posted the complete schematic diagrams and GAL equations for the video circuitry. Find a bug, or suggest an improvement!

Food for Thought

A few things didn’t work out as I’d hoped with the design. The idea of changing the video settings line-by-line is cool, but it complicated matters quite a bit, and I never did manage to iron it all out perfectly. I think that modes 9, 10, and 11 will use the mode byte correctly, but will also display it as a visible pixel. Oops.

One tricky wrinkle I didn’t anticipate is that some modes involve different byte rates than others. For 512 horizontal pixels at 1 bit per pixel (2 colors), a new byte must be loaded from VRAM every 8 pixels. But for all other modes, a new byte must be loaded every 4 pixels. That means that the pipeline delay is different, so the time between column count zero and the first visible pixel of the line appearing on screen is different. If combining 1-bit lines with 2, 4, or 8-bit lines in the same screen, the lines will start 4 pixels apart horizontally, so the left and right screen edges will look ragged. What’s worse, I can’t even generate the correct timing signals to make ragged lines, since I’ve run out of space in all the programmable GALs. I discovered this only at the 11th hour. My work-around is to use the same timing signals for all lines, regardless of color depth. This suppresses the first pixel of 2, 4, and 8-bit lines, and tacks it on to the end of the line instead. I can compensate for this wrap-around behavior in software, but it’s a pain.

Current Source Confusion

I’m about ready to call the BMOW video system design officially complete, and hopefully I’ll be posting specs soon. I’ve run into one last snag, however. The UM70C171 palette chip requires a reference current to calibrate the DAC output. The official datasheet shows a few examples of how to do this, and I’m following the example that uses a LM334 current source in a temperature-compensated configuration shown on page 3-109. Their formula for how to choose the resistor values to get the desired current doesn’t seem to make sense, though, and doesn’t agree with the LM334 datasheet. Anyone see where I might be misinterpreting things? John Honniball mentioned that he had a copy of the IMS G171 datasheet, which should be pin-compatible. Does it say anything different?

The UM70C171 datasheet says I need a 4.44mA reference current for a singly-terminated output, and that the relationship of the resistor R1 to the current is IREF = 33.85mV / R1. Solving for R1 yields about 7.5 ohms, which is the value they show in the accompanying diagram. Also shown in the diagram is a second R2 resistor with a value of 75 ohms, which isn’t mentioned in the text.

In contrast, the LM334 datasheet on page 5 shows an example of using it in the same temperature-compensated configuration, with different results. It says the ratio of R2 to R1 should be 10:1, and IREF = 0.134V / R1. Solving for R1 yields R1 = about 30 ohms and R2 = 300 ohms. That’s a substantial difference from what the UM70C171 datasheet says.

The coefficient in the formula from the LM334 datasheet is almost precisely 4 times the coefficient from the UM70C171 datasheet, which I’m sure is significant somehow. I feel like I must be missing something obvious.
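To make the discrepancy concrete, here is the arithmetic for both formulas worked through in Python. The voltage coefficients and target current are the ones quoted above; the variable and function names are only for illustration.

```python
def resistor_for_current(v_coeff, i_ref):
    """Solve IREF = v_coeff / R1 for R1, in ohms."""
    return v_coeff / i_ref


I_REF = 4.44e-3            # required reference current, in amps
UM70C171_COEFF = 33.85e-3  # volts, from the UM70C171 datasheet formula
LM334_COEFF = 0.134        # volts, from the LM334 datasheet formula

r1_palette = resistor_for_current(UM70C171_COEFF, I_REF)
r1_lm334 = resistor_for_current(LM334_COEFF, I_REF)
r2_lm334 = 10 * r1_lm334   # LM334 datasheet: R2 to R1 ratio of 10:1
coeff_ratio = LM334_COEFF / UM70C171_COEFF

if __name__ == "__main__":
    print(f"UM70C171 formula: R1 = {r1_palette:.2f} ohms")
    print(f"LM334 formula:    R1 = {r1_lm334:.2f} ohms, R2 = {r2_lm334:.1f} ohms")
    print(f"coefficient ratio = {coeff_ratio:.2f}")
```

The two formulas give roughly 7.6 ohms and 30.2 ohms for R1, and the ratio of the coefficients is about 3.96.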

Decisions, Decisions

I honestly cannot make up my mind about whether to use this salvaged UM70C171 VGA palette chip as part of my video system design. I’ve changed my mind four times already in the past couple of days. I think I’m going crazy!

Previously I thought there would be a tradeoff for using the palette chip, and I’d need to give up some flexibility in supported bit depths and palette switching to use the chip, as compared with my original design that didn’t use it. But I’ve since worked out a few improvements to the design that avoid needing to give up anything.

From a technical standpoint, then, using the palette chip has nothing but advantages. It combines an 18-bit dual-port palette RAM and all the required D-to-A conversion hardware into a single chip.

- The required board space for the video system would be reduced by the equivalent of about 3 chips. That’s not a huge amount, but it would make a big difference in how much audio circuitry I could fit on the board, plus any other 11th hour things I’ve forgotten.

- The palette entries would be 18 bits, 6 bits per channel, compared with the 8 bit, 3/3/2 bits per channel of my custom-built output stage. That would produce much nicer looking colors when displaying digitized images or smooth color gradients, and it would completely sidestep the whole question of how to best map 8 bits to 256 colors that I wrote about last week.

- I’m less likely to encounter any problems related to high-frequency operation if I use the palette chip. It’s rated to run at up to 66MHz. With the custom output circuit, I’d have to be very careful to use high speed parts and account for the timing requirements everywhere.

- The D-to-A conversion would probably produce cleaner results than my custom circuit. There was a fair bit of noise in the video test circuit I built a few weeks ago, and the final circuit would probably suffer from some of the same problems. Also, the palette chip should produce nearly equal changes in brightness for equal changes in RGB values, which may not be the case for my custom DAC. I’m not even certain that my custom DAC will produce a brighter color for binary 100 than 011.

If I do use the UM70C171 palette chip, it will only replace about 25% of my original video system design. I’ll still need to build the circuits to generate the row and column addresses, video sync signals, VRAM, character ROM, and data latching. The only part that would be different is how a byte of video data, once it’s retrieved from VRAM or ROM, gets converted into analog RGB voltages for the monitor.

So why *wouldn’t* I use this palette chip? It sounds like a no brainer. The only reason is really a lingering feeling that it’s somehow “cheating”, or isn’t as interesting an accomplishment to build a video system if part of it is a purpose-built component scavenged from a VGA card. It’s the same argument that I went through with myself six months ago about whether to use GALs in BMOW’s design. Ultimately I did use GALs, and it’s worked well, and there’s no way I could have fit everything on the existing board without them. So from a practical standpoint the palette chip would be a big win. I guess I just need to swallow my pride a little, or let go of the “not invented here” attitude, and use what works.

Apple II Machine Language Monitor

While waiting for the parts I need for the video system, I ported the machine language monitor from the Apple II ROMs to BMOW. Somehow I’d never gotten around to writing an interactive program to examine and modify memory, which I’ll certainly need once I start trying to poke bytes into video memory and see something on the screen. Rather than write my own monitor program, I decided it would be interesting to port the Apple II’s. BMOW’s instruction set is mostly a superset of the 6502 CPU in the Apple II, and the monitor program’s source code is listed in the famous Apple II “Red Book”. It was written by Steve Wozniak and Allen Baum in 1977. I figured it shouldn’t be too hard to convert it for BMOW’s purposes. After a longer than usual amount of fiddling I have it mostly working now, but it proved to be a major pain in the ass! Here’s a photo of the monitor program in action, showing a disassembly of itself.

The monitor is pretty darn impressive for a 2K program. Its features:

- Examine a single memory location or a range of addresses

- Disassemble a program at a given address

- Store one or more user-supplied bytes beginning at a given address

- Block move memory

- Compare two blocks of memory

- Run a program at a given address

- Single step a program at a given address

- Perform hexadecimal math using a built-in calculator

The original Apple II version also included an assembler, as well as functions to read and write data from a cassette tape. Ah, those were the days!

To port the monitor program to BMOW, I expected I’d need to solve a couple of problems:

- Replace the original keyboard input and screen output code with BMOW equivalents

- Adapt the output for a 20×4 LCD, instead of the original 40×24 television

That wasn’t too difficult. The bigger problem I hadn’t expected was that BMOW was much less 6502-compatible than I’d thought. In particular, which condition codes are set by which instructions differed quite a lot between the 6502 and BMOW. It turned out that the Apple II monitor relied substantially on the fact that certain instructions like INC would set the zero and negative flags, but leave the carry flag untouched. BMOW wasn’t designed to work that way, and unfortunately it took me many hours of digging through the source code to understand how it worked before I picked up on this detail, and even longer to find and fix all the instances where it caused bugs. My BMOW simulator proved to be extremely valuable, and I even spent some time to add rudimentary source-level debugging to the simulator. The good news is that I found and fixed a lot of BMOW microcode bugs, and I was eventually able to find a way to change BMOW’s condition code usage to match the 6502’s by reprogramming a GAL, with no wiring changes at all. And now I have a working monitor program!
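The flag behavior in question is easy to state precisely. This small Python model, a sketch rather than anything taken from BMOW's microcode, shows how a 6502-style INC updates the zero and negative flags while passing the carry flag through untouched.

```python
def inc_6502(value, carry):
    """Model a 6502-style INC on an 8-bit value.

    Returns (result, zero, negative, carry).
    """
    result = (value + 1) & 0xFF         # 8-bit wrap-around
    zero = result == 0
    negative = bool(result & 0x80)
    return result, zero, negative, carry  # carry passes through unchanged


if __name__ == "__main__":
    print(inc_6502(0xFF, True))
```

Because the carry survives, 6502 code can increment an index or counter between two carry-dependent operations without saving and restoring the flag.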

After spending all that time in the monitor source code, I’ve come to know it pretty well, and I have to say that Woz and Baum must be crazy! OK not crazy, but they certainly approached programming much differently than people would today. The program makes widespread use of some pretty bizarre techniques which I’m sure were intended to economize every last byte. Functions are constantly calling into the bodies of other functions to borrow a little snippet here or there, so it becomes difficult to identify which bits of source code are related to any particular program feature. Conditional branches are used in many cases where it’s obvious that the condition will always evaluate true, which I think is because the conditional branch instruction takes less program space than an unconditional jump.

Beyond that, it also looks as if they intentionally designed the program so that certain parts of it would end up at very specific memory addresses. The program is precisely 2048 bytes. There are NOPs and padding bytes in a couple of places, and in a few places I saw a bigger instruction used where a smaller one could have been. For example, some code does a branch to another instruction, which is a RTS (return from subroutine). Why not just put the RTS in directly instead of having the branch? It seems that Woz and Baum may have started with goal addresses in mind for portions of the program, so that certain address arithmetic would work out nicely and save a few instructions, and then they went out of their way to make sure those goal addresses were achieved.

Maybe Woz or Baum or someone else who knows will follow up and explain what they were thinking; I’d be curious. Years ago I once received a shareware registration from Woz for a Macintosh game I wrote: a $10 check (signed simply “Woz”). In retrospect it might have been fun to hang on to, but I cashed it. After that we exchanged a couple of emails, though, and I even sent him a game T-shirt. I wonder if it’s still wedged at the bottom of his dresser drawer.

Video Palette Setup

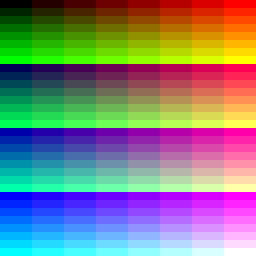

I’ve been thinking some more about the best way to set up the palette for BMOW’s video system. It’s the only part of the design that I’m not really happy with. There are a number of different options I’m considering. Here’s a summary of each one, along with a sample palette image showing all the possible colors. I’ve tried to arrange the colors in each sample in the most pleasing way, but try not to let the ordering of the colors sway your opinion.

8-bit palette entries, RRRGGGBB format

This is the current plan. Two alternative orderings of the same 256 colors are shown here. Each entry is a single byte, with 3 bits of red, 3 bits of green, and 2 bits of blue. It’s simple to understand and build, and requires two 3-bit DACs (digital-to-analog converters) and one 2-bit DAC. There are 256 possible colors, and the division of bits between red, green, and blue is asymmetric. Only 8 or 4 intensities of any given hue are possible. For grayscale, it’s limited to either 4 shades of gray, or eight shades where some “grays” have too much or too little blue, since there’s less precision for blue than for red and green.
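The grayscale problem can be seen by decoding the format in a few lines of Python. This sketch assumes each DAC output scales linearly from 0.0 to 1.0, which real hardware may not do, and the function names are made up for illustration.

```python
def decode_rrrgggbb(entry):
    """Split an RRRGGGBB byte into channel levels scaled to 0.0-1.0."""
    r = (entry >> 5) & 0x7
    g = (entry >> 2) & 0x7
    b = entry & 0x3
    return r / 7, g / 7, b / 3      # assumed: linear DAC outputs


def nearest_gray_entries():
    """For each of the 8 equal red/green levels, pick the closest blue level.

    Returns a list of (palette byte, blue error); a positive error means
    too much blue, a negative error too little.
    """
    result = []
    for level in range(8):
        target = level / 7
        blue = min(range(4), key=lambda b: abs(b / 3 - target))
        entry = (level << 5) | (level << 2) | blue
        result.append((entry, blue / 3 - target))
    return result


if __name__ == "__main__":
    for entry, error in nearest_gray_entries():
        print(f"{entry:08b}  blue error {error:+.3f}")
```

Only black and white come out perfectly neutral under this model; the six shades in between are each tinted slightly toward or away from blue.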

8-bit palette entries, HHHIIIII format

Each entry is a single byte, with three bits of hue and five bits of intensity. The three hue (H) bits are used to switch the R, G, and B color outputs on or off. Then for any color outputs that are on, the five intensity (I) bits define the intensity for all of them. There are still only 256 possible colors, but it’s symmetric in that all the color channels are treated identically, and it permits 32 intensities per hue. It requires a single 5-bit DAC whose output is used for all three color channels, and three transistors to switch the analog outputs on and off according to the H bits (I think this would work– I need to sketch up an appropriate analog circuit). The big drawback is that there are only 8 hues, so some colors like orange and brown can’t be rendered. Also, 39 of the 256 possible colors are black, which seems wasteful. That’s the entire top row and left column in the sample image.
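The count of wasted black entries can be confirmed by enumerating the whole palette. In this sketch the assignment of hue bits to channels (R, G, B from high bit to low) is an assumption; it doesn't affect the counts.

```python
def decode_hhhiiiii(entry):
    """Return (r, g, b) levels in 0..31 for an HHHIIIII palette byte."""
    hue = (entry >> 5) & 0x7
    intensity = entry & 0x1F
    # Assumed assignment of hue bits to channels: bit 2 = R, bit 1 = G, bit 0 = B.
    r = intensity if hue & 0b100 else 0
    g = intensity if hue & 0b010 else 0
    b = intensity if hue & 0b001 else 0
    return r, g, b


black_entries = sum(1 for e in range(256) if decode_hhhiiiii(e) == (0, 0, 0))
distinct_colors = len({decode_hhhiiiii(e) for e in range(256)})

if __name__ == "__main__":
    print(black_entries, distinct_colors)
```

All 32 entries with hue 000 are black, as are the 7 remaining entries with intensity 00000, for 39 in total. That leaves 218 distinct colors out of 256 entries.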

8-bit palette entries, HHHHIIII format

In theory this would yield 16 hues, with 16 intensities per hue, which sounds like a decent compromise. In practice, I can’t think of a simple way to define 16 hues from the four H bits. Three bits of H are easy to treat as on/off controls for the R, G, and B channels, but four bits? If anyone can think of an obvious way to map eight bits of HHHHIIII to individual R, G, B outputs, I’d love to hear it. The image shows one possible mapping, but it’s a big switch statement in the program I wrote to generate the sample images, and I’m not sure how I’d implement it efficiently in hardware. Certainly some extra chips would be needed in the output logic.

8-bit palette entries, RRGGBBSS format

Each entry is one byte, with four bits each for R, G, and B. However, the least-significant two bits for each color are shared among all the colors. There are 256 possible colors in total. They consist of 64 basic hues, and each hue can be biased brighter by 4 intensity levels, with the total intensity bias being as much as one-quarter of the total black-to-white intensity range. It requires three 4-bit DACs, but no other special output logic.
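Here is a minimal sketch of how an RRGGBBSS byte would expand into three 4-bit channel values, with the shared bits appended to each channel. The function name is hypothetical; the bit positions follow the order of the letters in the format name.

```python
def decode_rrggbbss(entry):
    """Expand an RRGGBBSS byte into three 4-bit channel values (0..15)."""
    r2 = (entry >> 6) & 0x3
    g2 = (entry >> 4) & 0x3
    b2 = (entry >> 2) & 0x3
    shared = entry & 0x3            # low two bits, common to all channels
    return (r2 << 2) | shared, (g2 << 2) | shared, (b2 << 2) | shared


distinct_colors = len({decode_rrggbbss(e) for e in range(256)})

if __name__ == "__main__":
    print(decode_rrggbbss(0b11_00_01_10), distinct_colors)
```

Every one of the 256 entries decodes to a different color, so nothing is wasted in this format.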

8-bit palette entries, RRGGBBII format

This is very similar to RRGGBBSS format, except that the extra two bits are used to set the overall intensity of the pixel, rather than as the least significant bits of the color data. Each of the 64 primary hues can be rendered at 25%, 50%, 75%, or 100% of normal intensity. It’s a similar idea to the extra half-brite mode from the Amiga. The advantage over RRGGBBSS format is that there’s more fidelity among the darker colors. There is some redundancy in the palette, however: red = 01 (binary) with 100% intensity is the same as red = 10 (binary) with 50% intensity. I’m not sure what the best way to implement this in hardware would be. Possibly as a 2-bit intensity DAC, whose output is used as the reference voltage for three more 2-bit color DACs.
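The redundancy mentioned above shows up when the format is decoded with exact fractions. This sketch assumes the intensity codes 00 through 11 map to 25% through 100% in order, and that each 2-bit color value scales linearly; neither detail is fixed by the description.

```python
from fractions import Fraction


def decode_rrggbbii(entry):
    """Return (r, g, b) as exact fractions of full brightness."""
    r = (entry >> 6) & 0x3
    g = (entry >> 4) & 0x3
    b = (entry >> 2) & 0x3
    # Assumed mapping: II = 00 -> 25%, 01 -> 50%, 10 -> 75%, 11 -> 100%.
    scale = Fraction((entry & 0x3) + 1, 4)
    return tuple(Fraction(c, 3) * scale for c in (r, g, b))


distinct_colors = len({decode_rrggbbii(e) for e in range(256)})

if __name__ == "__main__":
    # red = 01 at 100% versus red = 10 at 50%
    print(decode_rrggbbii(0b01_00_00_11) == decode_rrggbbii(0b10_00_00_01))
    print(distinct_colors)
```

The two red entries decode to the same color, so the number of distinct colors is below 256.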

15-bit palette entries, RRRRRGGGGGBBBBB format

Each entry is two bytes, with five bits each for R, G, and B. There are 32768 possible colors. It requires three 5-bit DACs. Since palette entries are twice as large as the 8-bit formats, it also requires two palette RAMs arranged in parallel. Storing the data in a single RAM that’s twice as big won’t work, because all 15 bits for the pixel need to be output simultaneously. It also requires two output registers to latch the output of the palette RAM, instead of one. Overall this would provide the best-looking results, but require the most hardware, and double the amount of palette data that the CPU needs to generate.
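For comparison with the 8-bit formats, this is roughly how a 15-bit entry would be packed and then split into the two bytes held by the parallel palette RAMs. The function names and the choice of which byte goes to which RAM are assumptions for the sketch.

```python
def pack_rgb555(r, g, b):
    """Pack three 5-bit channel values into a 15-bit palette entry."""
    return ((r & 0x1F) << 10) | ((g & 0x1F) << 5) | (b & 0x1F)


def split_for_parallel_rams(entry):
    """High and low bytes, as they might be stored in two 8-bit palette RAMs."""
    return (entry >> 8) & 0x7F, entry & 0xFF


def unpack_rgb555(high, low):
    """Rebuild the three 5-bit channel values from the two RAM bytes."""
    entry = (high << 8) | low
    return (entry >> 10) & 0x1F, (entry >> 5) & 0x1F, entry & 0x1F


if __name__ == "__main__":
    high, low = split_for_parallel_rams(pack_rgb555(1, 2, 3))
    print(high, low, unpack_rgb555(high, low))
```

Note that the green channel straddles the two bytes, which is one reason both RAMs have to deliver their output at the same moment.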
